Visual-Inertial Event Dataset

Contact: Simon Klenk, Jason Chui.

TUM-VIE: The TUM Stereo Visual-Inertial Event Data Set

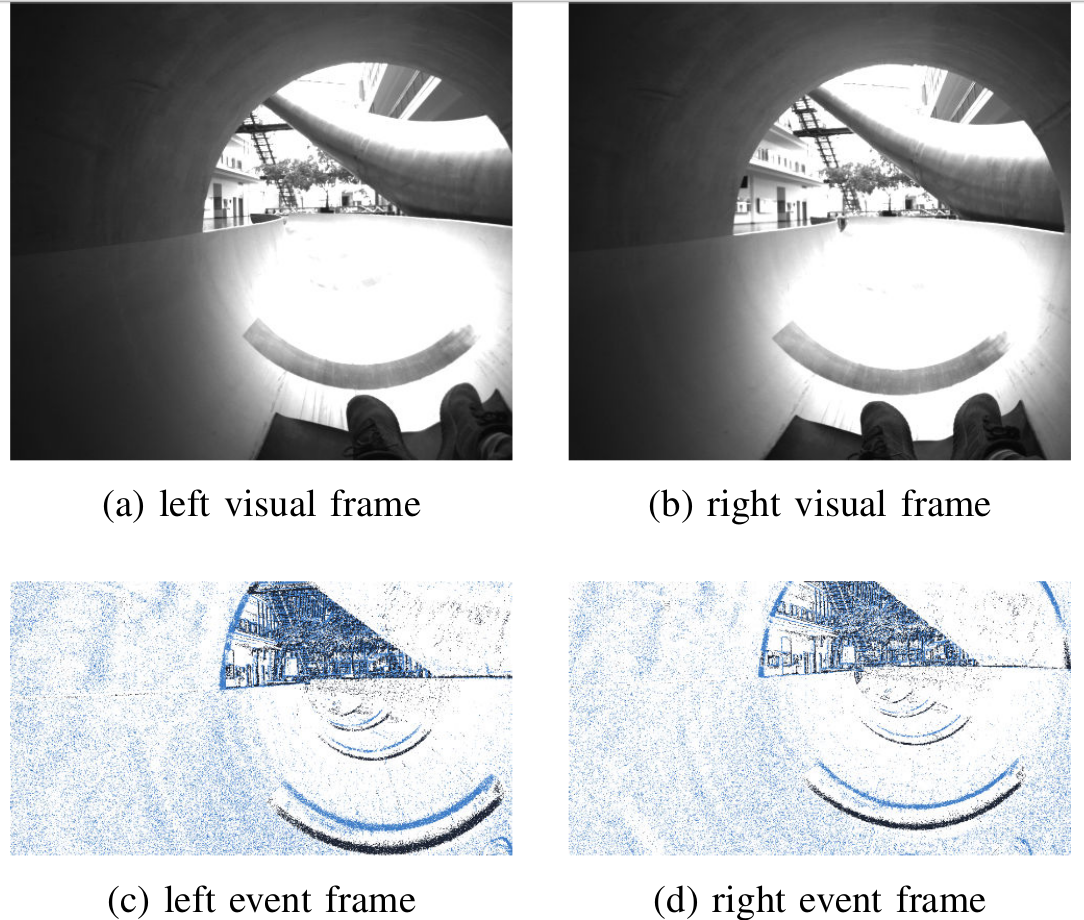

TUM-VIE is an event camera dataset for developing 3D perception and navigation algorithms. It contains handheld and head-mounted sequences in indoor and outdoor environments, including rapid motion during sports and high-dynamic-range scenes. TUM-VIE includes challenging sequences on which state-of-the-art VIO fails or accumulates large drift. Hence, it can help push the boundaries of event-based visual-inertial algorithms.

The dataset contains:

- Stereo event data from Prophesee Gen4 HD event cameras (1280x720 pixels)

- Stereo grayscale frames at 20Hz (1024x1024 pixels)

- IMU data at 200Hz

- 6-DoF motion capture data at 120Hz (at the beginning and end of each sequence)

Timestamps between all sensors are synchronized in hardware.
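Because all streams share one hardware-synchronized clock, samples from different sensors can be associated by timestamp alone. The Python sketch below (the function name is hypothetical) collects, for each pair of consecutive grayscale frames, the indices of the IMU samples recorded in between, e.g. as input to IMU preintegration. It assumes sorted integer timestamps in microseconds on the common clock.

```python
from bisect import bisect_left
from typing import List, Sequence


def imu_indices_between_frames(frame_ts_us: Sequence[int],
                               imu_ts_us: Sequence[int]) -> List[range]:
    """For each pair of consecutive frames, return the range of IMU sample
    indices whose timestamps fall in [t_k, t_{k+1}).

    Both inputs must be sorted ascending and on the same clock (microseconds),
    which hardware synchronization provides.
    """
    windows = []
    for t_start, t_end in zip(frame_ts_us, frame_ts_us[1:]):
        lo = bisect_left(imu_ts_us, t_start)  # first IMU sample at or after t_start
        hi = bisect_left(imu_ts_us, t_end)    # first IMU sample at or after t_end
        windows.append(range(lo, hi))
    return windows


# Toy timestamps: frames at 20 Hz (every 50 000 us), IMU at 200 Hz (every 5 000 us).
frames = [0, 50_000, 100_000]
imu = list(range(0, 100_001, 5_000))
print([len(w) for w in imu_indices_between_frames(frames, imu)])  # [10, 10]
```

With the nominal rates (20Hz frames, 200Hz IMU), roughly ten IMU samples fall between consecutive frames.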

Publication


Conference and Workshop Papers

2021

TUM-VIE: The TUM Stereo Visual-Inertial Event Dataset. In International Conference on Intelligent Robots and Systems (IROS), 2021.

Dataset Sequences

All sequences share consistent timestamps across the visual cameras, the IMU and the event cameras. Timestamps and exposure times are given in microseconds. IMU data is given as time (us), gx (rad/s), gy (rad/s), gz (rad/s), ax (m/s^2), ay (m/s^2), az (m/s^2), temperature.
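The IMU column order above can be turned into typed samples with a small parser. This is a minimal sketch, not the dataset's official loader: it assumes one sample per line with values separated by commas and/or whitespace, and it skips blank lines and lines starting with `#` (assumed header or comment lines). `ImuSample` and `parse_imu_lines` are hypothetical names, and the temperature unit is not stated on this page.

```python
from dataclasses import dataclass
from typing import Iterable, List, Tuple


@dataclass
class ImuSample:
    t_us: int                           # timestamp in microseconds
    gyro: Tuple[float, float, float]    # (gx, gy, gz) in rad/s
    accel: Tuple[float, float, float]   # (ax, ay, az) in m/s^2
    temperature: float                  # unit not specified in the description


def parse_imu_lines(lines: Iterable[str]) -> List[ImuSample]:
    """Parse rows in the order time, gx, gy, gz, ax, ay, az, temperature.

    Assumption: comma- and/or whitespace-separated values; blank lines and
    lines starting with '#' are skipped.
    """
    samples = []
    for line in lines:
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        values = line.replace(",", " ").split()
        if len(values) != 8:
            raise ValueError(f"expected 8 columns, got {len(values)}: {line!r}")
        t, gx, gy, gz, ax, ay, az, temp = values
        samples.append(ImuSample(
            t_us=int(float(t)),  # tolerate "1000" as well as "1000.0"
            gyro=(float(gx), float(gy), float(gz)),
            accel=(float(ax), float(ay), float(az)),
            temperature=float(temp),
        ))
    return samples


rows = ["# time gx gy gz ax ay az temperature",
        "1000, 0.01, -0.02, 0.0, 0.1, 9.81, 0.2, 35.5"]
s = parse_imu_lines(rows)[0]
print(s.t_us, s.accel)  # 1000 (0.1, 9.81, 0.2)
```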

Code

A minimal Python example for accessing and visualizing the data is available here: https://tumevent-vi.vision.in.tum.de/access_visualize_h5.py.

Each h5 event file consists of:

- events: the event stream, stored as tuples of (x, y, t_us, polarity).

- ms_to_idx: a mapping to retrieve the index into the event array corresponding to an integer millisecond. This is the same mapping as introduced in DSEC (see https://github.com/uzh-rpg/DSEC).
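To illustrate how such a millisecond index is used, the sketch below rebuilds it from sorted event timestamps and uses it to slice out a time window without scanning all events. It assumes the DSEC-style convention that `ms_to_idx[ms]` is the index of the first event with `t_us >= ms * 1000`; check this against the DSEC documentation before relying on it. It also assumes timestamps start near zero (for absolute timestamps, subtract a reference time first). The function names are hypothetical; in practice you would read the stored `ms_to_idx` from the h5 file instead of rebuilding it.

```python
from bisect import bisect_left
from typing import List, Sequence


def build_ms_to_idx(t_us: Sequence[int]) -> List[int]:
    """ms_to_idx[ms] = index of the first event with t_us >= ms * 1000.

    Assumes t_us is sorted ascending and non-negative.
    """
    if not t_us:
        return []
    last_ms = t_us[-1] // 1000
    return [bisect_left(t_us, ms * 1000) for ms in range(last_ms + 1)]


def event_slice(ms_to_idx: Sequence[int], start_ms: int, end_ms: int,
                n_events: int) -> slice:
    """Index slice into the event arrays covering [start_ms, end_ms)."""
    start = ms_to_idx[start_ms]
    # Past the last indexed millisecond, the window runs to the end of the stream.
    end = ms_to_idx[end_ms] if end_ms < len(ms_to_idx) else n_events
    return slice(start, end)


t = [0, 400, 999, 1000, 1500, 2999, 3000]
m = build_ms_to_idx(t)
print(m)                                # [0, 3, 5, 6]
print(t[event_slice(m, 1, 3, len(t))])  # [1000, 1500, 2999]
```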

Calibration

We provide two different calibration files:

- CalibrationA: https://tumevent-vi.vision.in.tum.de/camera-calibrationA.json, valid for loop-floor0:3, mocap-desk, mocap-desk2, skate-easy. It was created from the calibration sequences calibration_A1 and imu_calibration_A1.

- CalibrationB: https://tumevent-vi.vision.in.tum.de/camera-calibrationB.json, valid for all other sequences. It was created from the calibration sequences calibration_B1 and imu_calibration_B1.

The camera calibration files contain the intrinsics of all four cameras as well as the extrinsics T_imu_camN between camera N and the IMU, where N ∈ {left visual camera, right visual camera, left event camera, right event camera}. We use the Kannala-Brandt camera model with eight parameters in total (fx, fy, cx, cy, k1, k2, k3, k4; see https://vision.in.tum.de/research/vslam/double-sphere).
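For reference, the standard Kannala-Brandt projection with these eight parameters maps the angle θ between a ray and the optical axis through the polynomial d(θ) = θ + k1θ³ + k2θ⁵ + k3θ⁷ + k4θ⁹. The sketch below is a plain-Python version of that textbook model; the parameter names `fx, fy, cx, cy, k1..k4` are the common convention and are an assumption about how they are labeled in the JSON files. The function name is hypothetical, and it does not check whether the point lies inside the camera's valid field of view.

```python
import math
from typing import Tuple


def project_kb4(point_cam: Tuple[float, float, float],
                fx: float, fy: float, cx: float, cy: float,
                k1: float, k2: float, k3: float, k4: float) -> Tuple[float, float]:
    """Project a 3D point given in camera coordinates with the Kannala-Brandt model."""
    x, y, z = point_cam
    r = math.hypot(x, y)          # distance from the optical axis
    if r < 1e-12:
        # A point on the optical axis projects to the principal point.
        return cx, cy
    theta = math.atan2(r, z)      # angle between the ray and the optical axis
    theta2 = theta * theta
    # Horner form of d(theta) = theta + k1*theta^3 + k2*theta^5 + k3*theta^7 + k4*theta^9
    d = theta * (1 + theta2 * (k1 + theta2 * (k2 + theta2 * (k3 + theta2 * k4))))
    return fx * d * x / r + cx, fy * d * y / r + cy


# Toy intrinsics (not taken from the calibration files).
u, v = project_kb4((1.0, 0.0, 1.0), 500.0, 500.0, 640.0, 360.0, 0.0, 0.0, 0.0, 0.0)
```

With all k_i set to zero the model reduces to an equidistant fisheye (image radius proportional to θ), which is why a point at 45° lands at fx·π/4 pixels from the principal point in the example.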

The mocap-IMU calibration files contain the extrinsics T_imu_marker between the mocap marker frame and the IMU.

- https://tumevent-vi.vision.in.tum.de/mocap-imu-calibrationA.json (valid for loop-floor0:3, mocap-desk, mocap-desk2, skate-easy)

- https://tumevent-vi.vision.in.tum.de/mocap-imu-calibrationB.json (valid for all other sequences)
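The extrinsics above are typically chained with rigid-body transforms. The sketch below assumes the common convention that T_a_b maps points expressed in frame b into frame a; this convention is inferred from the naming and is not stated on this page. Under it, a mocap pose T_world_marker becomes an IMU pose via T_world_imu = T_world_marker · T_imu_marker⁻¹, and the relative pose between two cameras is T_cama_camb = T_imu_cama⁻¹ · T_imu_camb. All function names are hypothetical, and 4x4 matrices are plain nested lists to keep the example dependency-free.

```python
from typing import List

Mat4 = List[List[float]]


def matmul4(a: Mat4, b: Mat4) -> Mat4:
    """Product of two 4x4 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def invert_rigid(t: Mat4) -> Mat4:
    """Inverse of a rigid transform [R | p; 0 0 0 1] is [R^T | -R^T p]."""
    rt = [[t[j][i] for j in range(3)] for i in range(3)]  # R transposed
    p = [t[i][3] for i in range(3)]
    q = [-sum(rt[i][k] * p[k] for k in range(3)) for i in range(3)]
    return [rt[0] + [q[0]], rt[1] + [q[1]], rt[2] + [q[2]], [0.0, 0.0, 0.0, 1.0]]


def imu_pose_from_mocap(T_world_marker: Mat4, T_imu_marker: Mat4) -> Mat4:
    """T_world_imu = T_world_marker * T_marker_imu, with T_marker_imu = inv(T_imu_marker)."""
    return matmul4(T_world_marker, invert_rigid(T_imu_marker))


def relative_camera_pose(T_imu_cam_a: Mat4, T_imu_cam_b: Mat4) -> Mat4:
    """T_cama_camb = inv(T_imu_cama) * T_imu_camb."""
    return matmul4(invert_rigid(T_imu_cam_a), T_imu_cam_b)
```

If the files turn out to use the opposite convention (T_a_b mapping frame a into frame b), the inversions swap sides, so verify the convention on a mocap sequence before use.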

The following camera calibration sequences (recorded on each recording day) were used to estimate the intrinsics of the cameras as well as the relative poses between all four cameras:

| Sequence name | Events Left | Events Right | Images, IMU |
| --- | --- | --- | --- |
| calibA1 | events-left(5.0GB) | events-right(5.0GB) | vi-data(1.6GB) |
| calibA2 | events-left(3.8GB) | events-right(3.7GB) | vi-data(1.1GB) |
| calibB1 | events-left(4.6GB) | events-right(4.5GB) | vi-data(1.5GB) |
| calibB2 | events-left(4.4GB) | events-right(4.3GB) | vi-data(1.3GB) |

The mocap-IMU calibration sequences were used to estimate the relative pose between the cameras and the IMU.

| Sequence name | Events Left | Events Right | Images, IMU and GT-Poses |
| --- | --- | --- | --- |
| imu_calibA1 | events-left(3.4GB) | events-right(3.4GB) | vi-data(0.4GB) |
| imu_calibB1 | events-left(4.2GB) | events-right(4.2GB) | vi-data(0.5GB) |
| imu_calibB2 | events-left(5.7GB) | events-right(5.7GB) | vi-data(0.5GB) |

The vignette calibration can be useful for direct visual odometry methods.

| Sequence name | Vignette Left | Vignette Right | Images and IMU |
| --- | --- | --- | --- |
| vignette_calibration_1 | vignette-left.png | vignette-right.png | vi-data(0.3GB) |
| vignette_calibration_2 | vignette-left.png | vignette-right.png | vi-data(0.4GB) |
| vignette_calibration_3 | vignette-left.png | vignette-right.png | vi-data(0.5GB) |
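Direct methods usually compensate vignetting by dividing each pixel by the vignette attenuation at that location. The sketch below assumes a DSO-style multiplicative model in which the vignette image is proportional to the attenuation, so it normalizes the vignette by its maximum (which makes the PNG's storage scale irrelevant). It also assumes the image intensities are already linear; if the camera response is nonlinear, the inverse response would have to be applied first. `devignette` is a hypothetical name, and images are nested lists to keep the example dependency-free.

```python
from typing import List

Image = List[List[float]]


def devignette(image: Image, vignette: Image, eps: float = 1e-6) -> Image:
    """Divide each pixel by the vignette attenuation, normalized to a maximum of 1.

    eps guards against division by zero in fully dark vignette corners.
    """
    v_max = max(max(row) for row in vignette)
    if v_max <= 0:
        raise ValueError("vignette image must contain positive values")
    return [[px / max(v / v_max, eps) for px, v in zip(img_row, vig_row)]
            for img_row, vig_row in zip(image, vignette)]


# A corner pixel attenuated to 50% is brought back to the level of the center.
corrected = devignette([[50.0, 100.0], [100.0, 100.0]],
                       [[100.0, 200.0], [200.0, 200.0]])
print(corrected)  # [[100.0, 100.0], [100.0, 100.0]]
```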

Tonic format

A Tonic implementation of our dataset is available: https://tonic.readthedocs.io/en/latest/reference/datasets.html#tum-vie. Tonic is a Python library for event-based data that makes our dataset easy to use in Python.

Rosbag

We do not recommend the rosbag format for high-resolution event data because it is very storage-inefficient: most of our sequences exceed 50GB as rosbags. However, if your application depends on the rosbag format, you can use the conversion code by Suman Ghosh: https://github.com/tub-rip/events_h52bag (note that we do not employ a t_offset).

Sample Videos Event Stream

We provide a few selected sequences as AVI videos, rendered with an accumulation time of 10 milliseconds. White pixels represent positive events, black pixels negative events, and gray pixels no change.

| Sequence name | AVI video (left events) | AVI video (right events) |
| --- | --- | --- |
| running-easy | running-easy-left (1.2GB) | running-easy-right (1.2GB) |
| slide | slide-left (3.2GB) | slide-right (3.2GB) |
| bike-night | bike-night-left (4.8GB) | bike-night-right (4.8GB) |
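The rendering described for the sample videos (10 ms accumulation window; white for positive, black for negative, gray for no change) can be approximated as follows. This is a sketch, not the script used to produce the videos: what happens when one pixel fires several times within a window is not stated, so the "last event wins" rule here is an assumption. Treating any polarity greater than zero as positive works for both 0/1 and -1/+1 encodings. `accumulate_events` is a hypothetical name.

```python
from typing import Iterable, List, Tuple

Event = Tuple[int, int, int, int]  # (x, y, t_us, polarity)


def accumulate_events(events: Iterable[Event], width: int, height: int,
                      t_start_us: int, window_us: int = 10_000) -> List[List[int]]:
    """Render events in [t_start_us, t_start_us + window_us) as an 8-bit frame.

    128 (gray) = no event, 255 (white) = positive, 0 (black) = negative.
    If a pixel fires several times in the window, the last event wins.
    """
    frame = [[128] * width for _ in range(height)]
    t_end = t_start_us + window_us
    for x, y, t, p in events:
        if t_start_us <= t < t_end:
            frame[y][x] = 255 if p > 0 else 0
    return frame


evs = [(0, 0, 0, 1), (1, 0, 5_000, 0), (1, 1, 20_000, 1)]  # last event is outside the window
print(accumulate_events(evs, width=2, height=2, t_start_us=0))  # [[255, 0], [128, 128]]
```

Stepping `t_start_us` forward by 10 000 us per frame yields a 100 fps frame sequence that could then be written to a video file.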

License

All data in the TUM-VIE Dataset is licensed under a Creative Commons Attribution 4.0 License (CC BY 4.0), and the accompanying source code is licensed under a BSD-2-Clause License.