Ganlin Zhang

PhD Student

Technical University of Munich

School of Computation, Information and Technology

Informatics 9

Boltzmannstrasse 3

85748 Garching

Germany

Tel: +49-89-289-17782

Fax: +49-89-289-17757

Office: 02.09.055

Mail: ganlin.zhang@tum.de

Personal homepage: https://ganlinzhang.xyz

Research Interests

3D Vision, Visual SLAM, Structure from Motion, 3D Reconstruction.

Publications

Journal Articles

2025

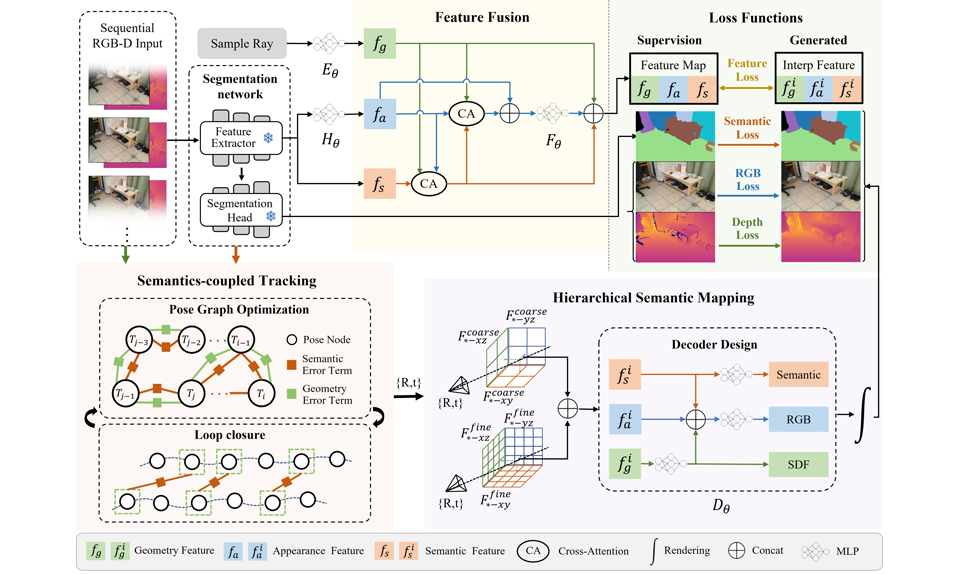

SNI-SLAM++: Tightly-coupled Semantic Neural Implicit SLAM, In IEEE Transactions on Pattern Analysis and Machine Intelligence, 2025. ([project page])

Preprints

2024

GlORIE-SLAM: Globally Optimized RGB-only Implicit Encoding Point Cloud SLAM, In arXiv preprint arXiv:2403.19549, 2024. ([project], [code])

Conference and Workshop Papers

2026

ViSTA-SLAM: Visual SLAM with Symmetric Two-view Association, In 3DV, 2026. ([project page], [code])

2025

Back on Track: Bundle Adjustment for Dynamic Scene Reconstruction, In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), 2025. ([project page])

Best Paper Candidate

Splat-SLAM: Globally Optimized RGB-only SLAM with 3D Gaussians, In Proceedings of the Computer Vision and Pattern Recognition Conference (CVPR) Workshops, 2025. ([code])

2023

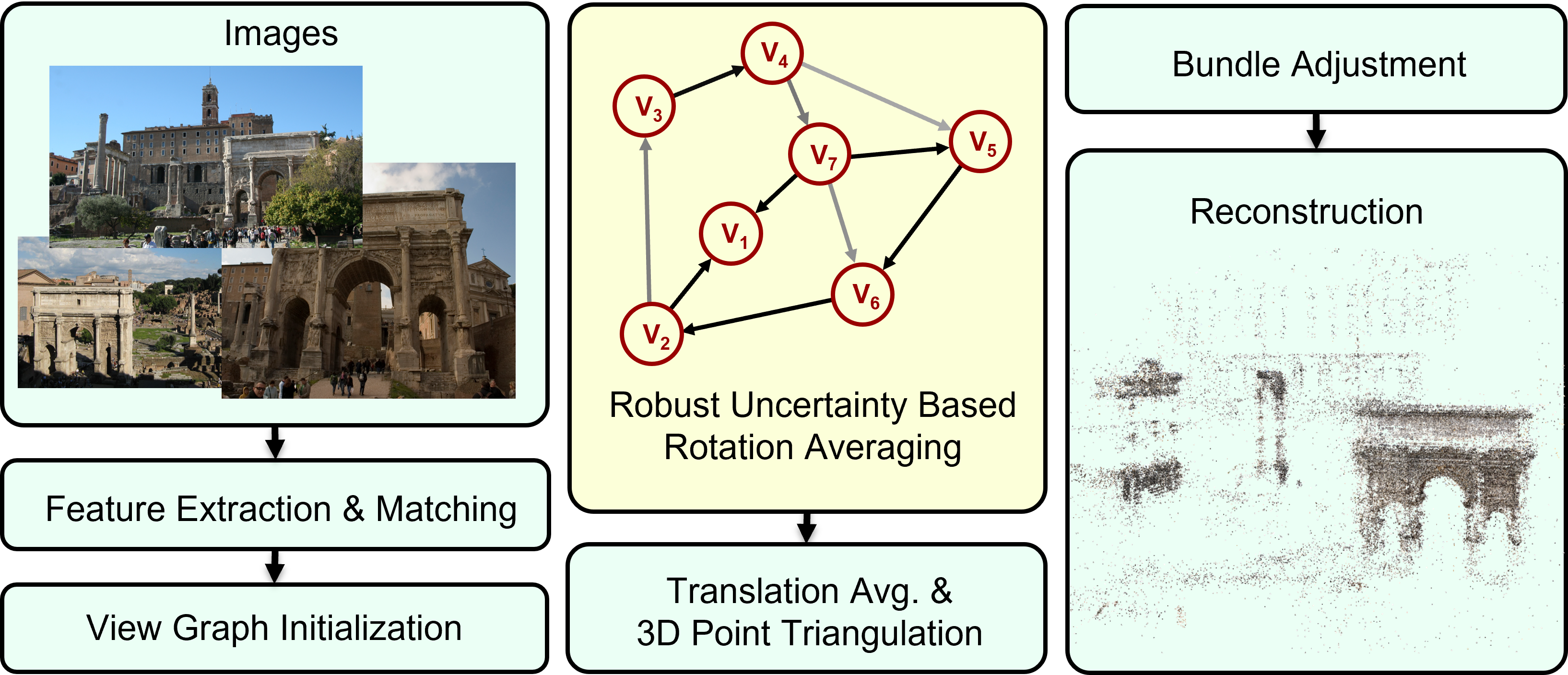

Revisiting Rotation Averaging: Uncertainties and Robust Losses, In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2023. ([code])