Johannes Michael Meier

PhD Student

Technical University of Munich

School of Computation, Information and Technology

Informatics 9

Boltzmannstrasse 3

85748 Garching

Germany

Fax: +49-89-289-17757

Mail: J.Meier@tum.de

Brief Bio

I am a Ph.D. student in the computer vision group at TUM (headed by Prof. Dr. Daniel Cremers). My PhD is carried out in collaboration with DeepScenario. In 2025 I completed a 4-month research visit at ETH Zurich (group of Marc Pollefeys).

My research focuses on monocular 3D object detection, tracking, semi-supervised learning, domain adaptation, and few-shot learning.

I hold an M.Sc. in Computer Science from the University of Tübingen. During my studies, I worked at the Max Planck Institute for Intelligent Systems (MPI-IS) in Tübingen. My Master's thesis (in collaboration with Bosch Research) was supervised by Prof. Dr. Andreas Geiger.

Publications

Conference and Workshop Papers

2026

IDEAL-M3D: Instance Diversity-Enriched Active Learning for Monocular 3D Detection, In IEEE Winter Conference on Applications of Computer Vision (WACV), 2026.

GrounDiff: Diffusion-Based Ground Surface Generation from Digital Surface Models, In IEEE Winter Conference on Applications of Computer Vision (WACV), 2026. ([project page])

2025

LeAD-M3D: Leveraging Asymmetric Distillation for Real-time Monocular 3D Detection, In arXiv, 2025. ([project page])

OrthoLoC: UAV 6-DoF Localization and Calibration Using Orthographic Geodata, In 39th Conference on Neural Information Processing Systems (NeurIPS) Datasets and Benchmarks Track, 2025. ([project page])

Oral Presentation

CoProU-VO: Combining Projected Uncertainty for End-to-End Unsupervised Monocular Visual Odometry, In 47th German Conference on Pattern Recognition (GCPR), 2025. ([project page])

Oral Presentation - Best Paper Award

Shape Your Ground: Refining Road Surfaces Beyond Planar Representations, In IEEE Intelligent Vehicles Symposium, 2025. ([project page])

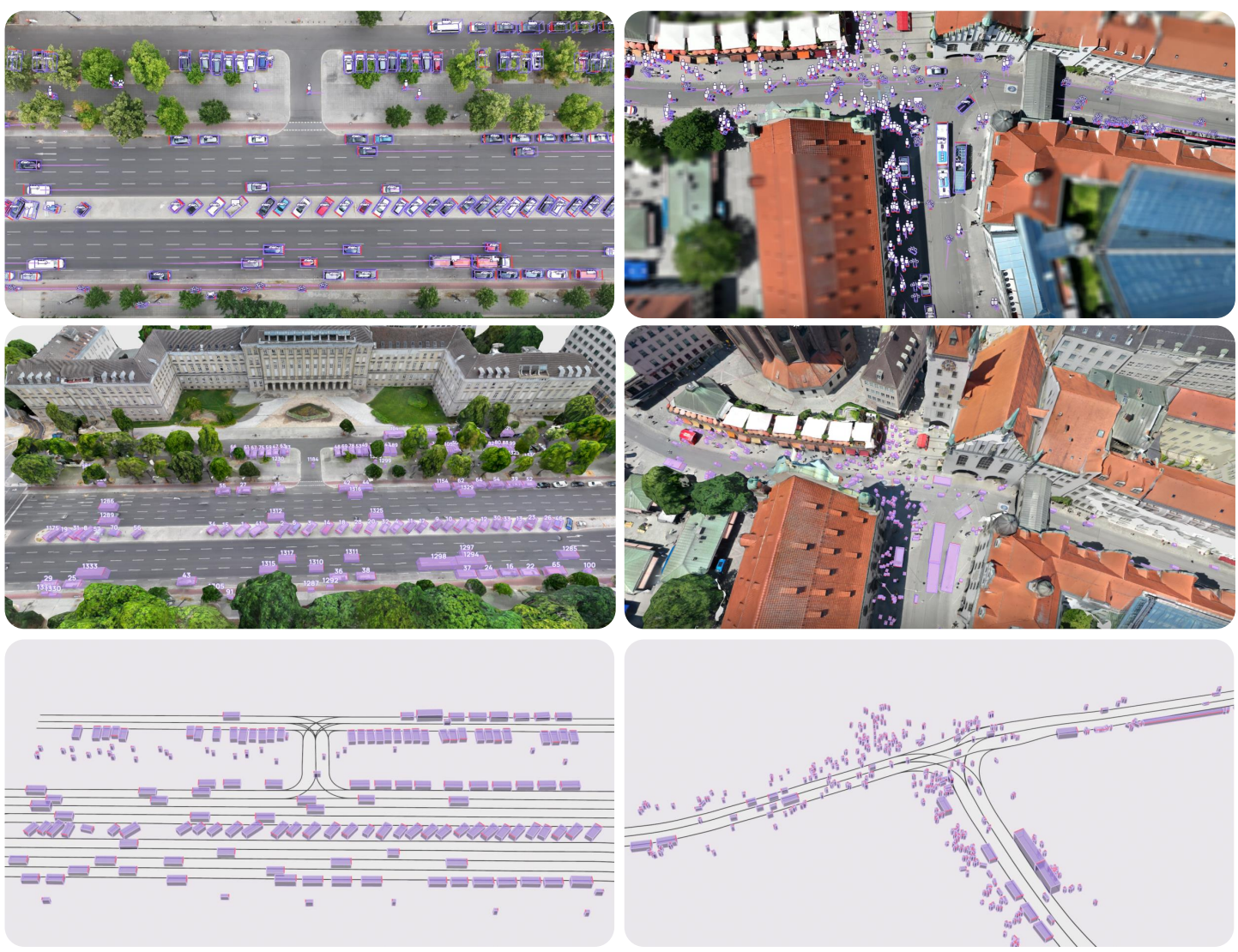

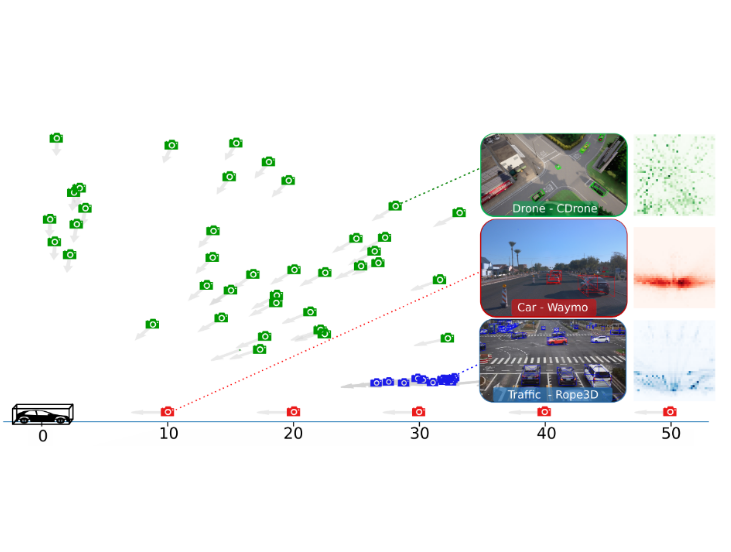

Highly Accurate and Diverse Traffic Data: The DeepScenario Open 3D Dataset, In IEEE Intelligent Vehicles Symposium, 2025. ([project page])

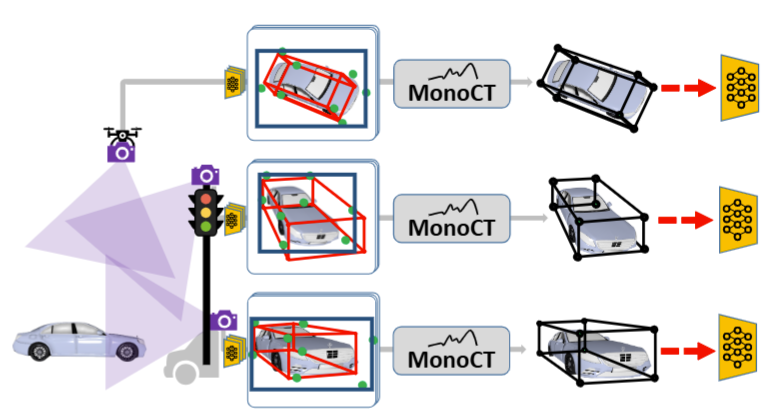

MonoCT: Overcoming Monocular 3D Detection Domain Shift with Consistent Teacher Models, In International Conference on Robotics and Automation (ICRA), 2025.

Oral Presentation

2024

CARLA Drone: Monocular 3D Object Detection from a Different Perspective, In 46th German Conference on Pattern Recognition (GCPR), 2024. ([project page])

Oral Presentation

2023

NIFF: Alleviating Forgetting in Generalized Few-Shot Object Detection via Neural Instance Feature Forging, In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2023.

2021

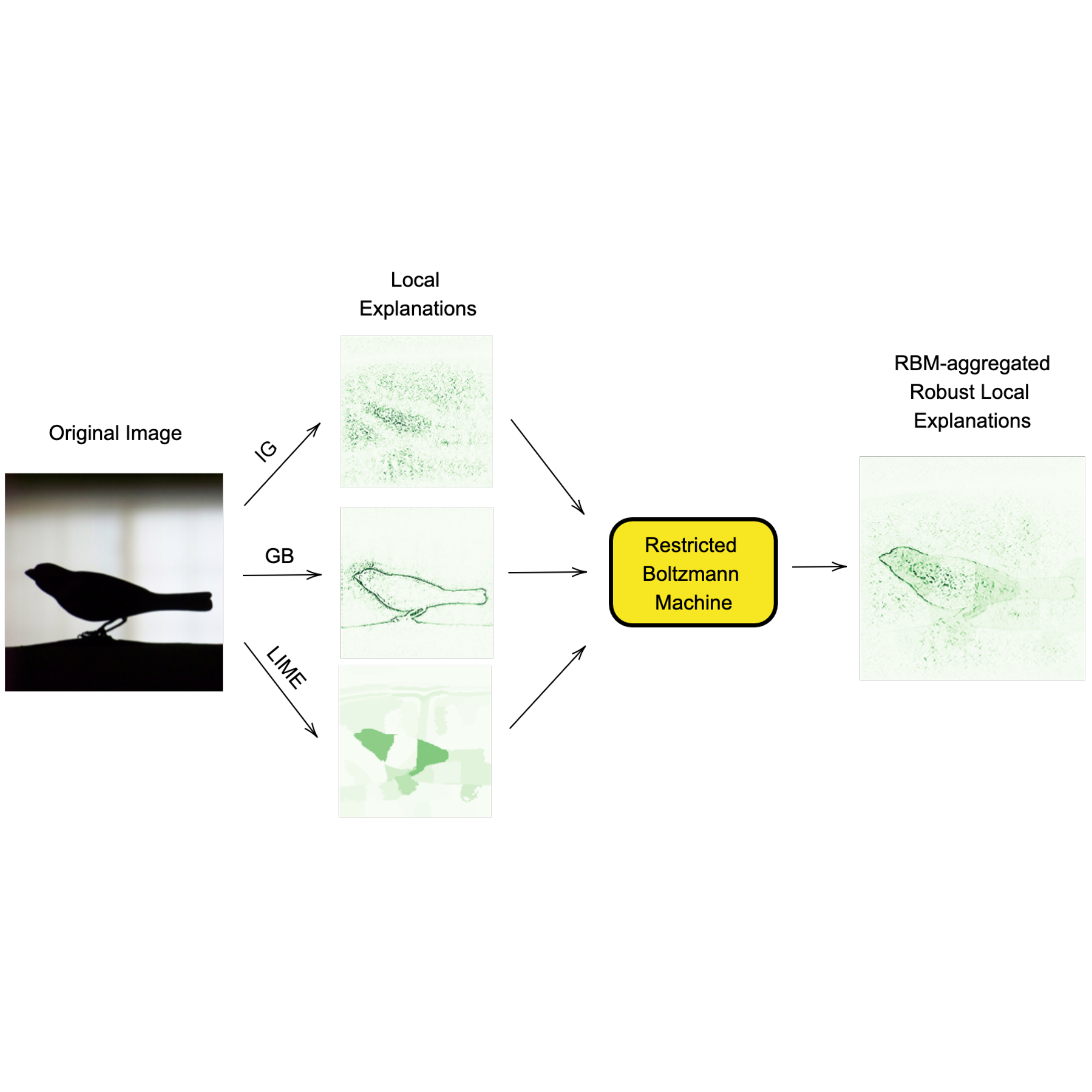

A Robust Unsupervised Ensemble of Feature-Based Explanations using Restricted Boltzmann Machines, In Neural Information Processing Systems Conference - NeurIPS 2021: eXplainable AI approaches for debugging and diagnosis workshop, 2021.