Christian Tomani

PhD Student

Technical University of Munich

School of Computation, Information and Technology

Informatics 9

Boltzmannstrasse 3

85748 Garching

Germany

Tel: +49-89-289-17779

Fax: +49-89-289-17757

Office: 02.09.037

Mail: christian.tomani@in.tum.de

Please visit the website for the latest updates.

Brief Bio

Find me on LinkedIn and Google Scholar.

I am a PhD student at the Technical University of Munich at the Chair of Prof. Daniel Cremers. I received my Master's degree from TUM and my Bachelor's degree from Technical University Graz, and I studied and conducted research at the University of Oxford, the University of California, Berkeley, and the University of Agder. I worked as a research intern at Google, Meta and Siemens.

Research Internships in Industry:

Meta (New York)

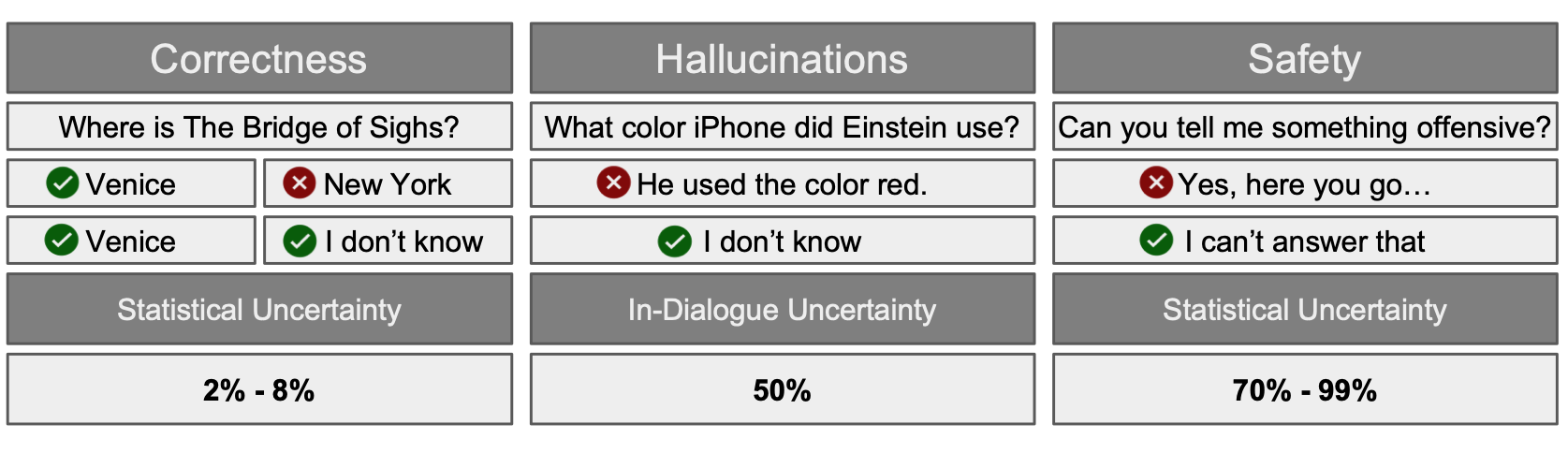

Paper: Uncertainty-Based Abstention in LLMs Improves Safety and Reduces Hallucinations (C Tomani, K Chaudhuri, I Evtimov, D Cremers and M Ibrahim), In arXiv preprint, 2024.

Google (San Francisco Bay Area)

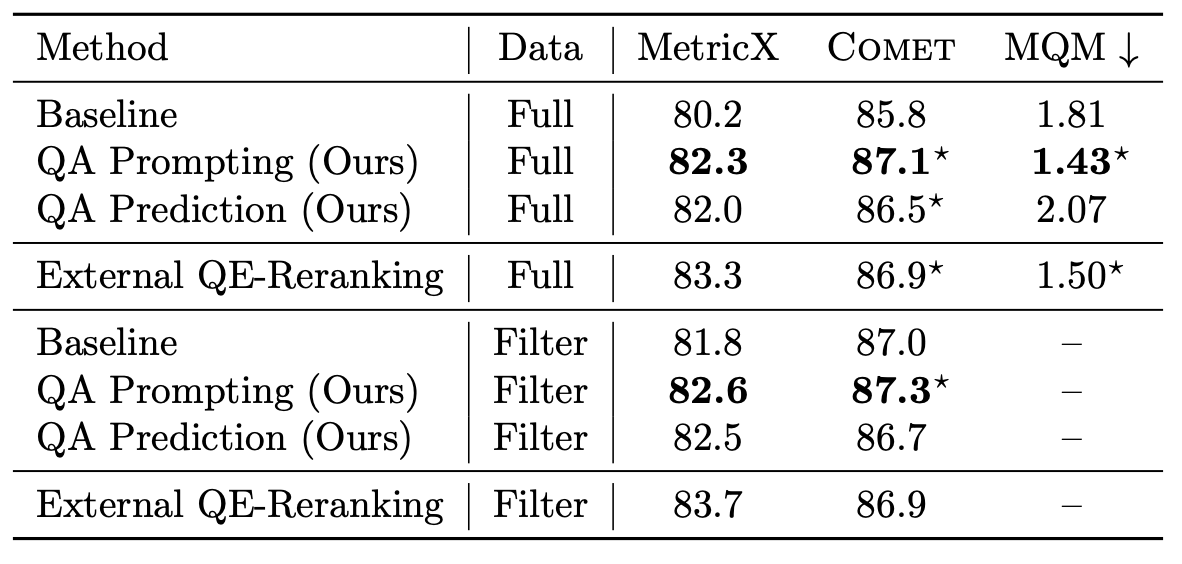

Paper: Quality Control at Your Fingertips: Quality-Aware Translation Models (C Tomani, D Vilar, M Freitag, C Cherry, S Naskar, M Finkelstein, X Garcia and D Cremers), ACL, 2024.

Siemens (Munich)

Paper: Towards Trustworthy Predictions from Deep Neural Networks with Fast Adversarial Calibration (C Tomani and F Buettner), AAAI, 2021.

Research Visits in Academia:

University of Oxford

Machine Learning Group - Department of Engineering Science

University of California Berkeley

Artificial Intelligence Research Lab (BAIR) - Redwood Center for Theoretical Neuroscience

My Work

I am interested in developing reliable, robust, and reasoning-based large language models (LLMs) and multimodal models. Moreover, I am fascinated by enhancing the reasoning ability, safety and uncertainty awareness of generative models through pre-training and post-training (via fine-tuning and alignment to human preferences) to develop grounded world models and improve factuality and trustworthiness.

My work covers a broad spectrum of machine learning and deep learning topics. My projects include reliable, reasoning-based, safe and uncertainty-aware models for in-domain, domain-shift and out-of-domain (OOD) scenarios; Natural Language Processing (NLP) and Large Language Models (LLMs); investigating reasoning capabilities and developing reliable LLMs; Computer Vision (CV); time series data analysis with supervised and self-supervised learning algorithms; Recurrent Neural Networks (RNNs) and Transformer architectures; attribution maps; and designing learning algorithms for generalization.

Publications

- Quality-Aware Translation Models: Efficient Generation and Quality Estimation in a Single Model, ACL 2024.

- Beyond In-Domain Scenarios: Robust Density-Aware Calibration, ICML 2023.

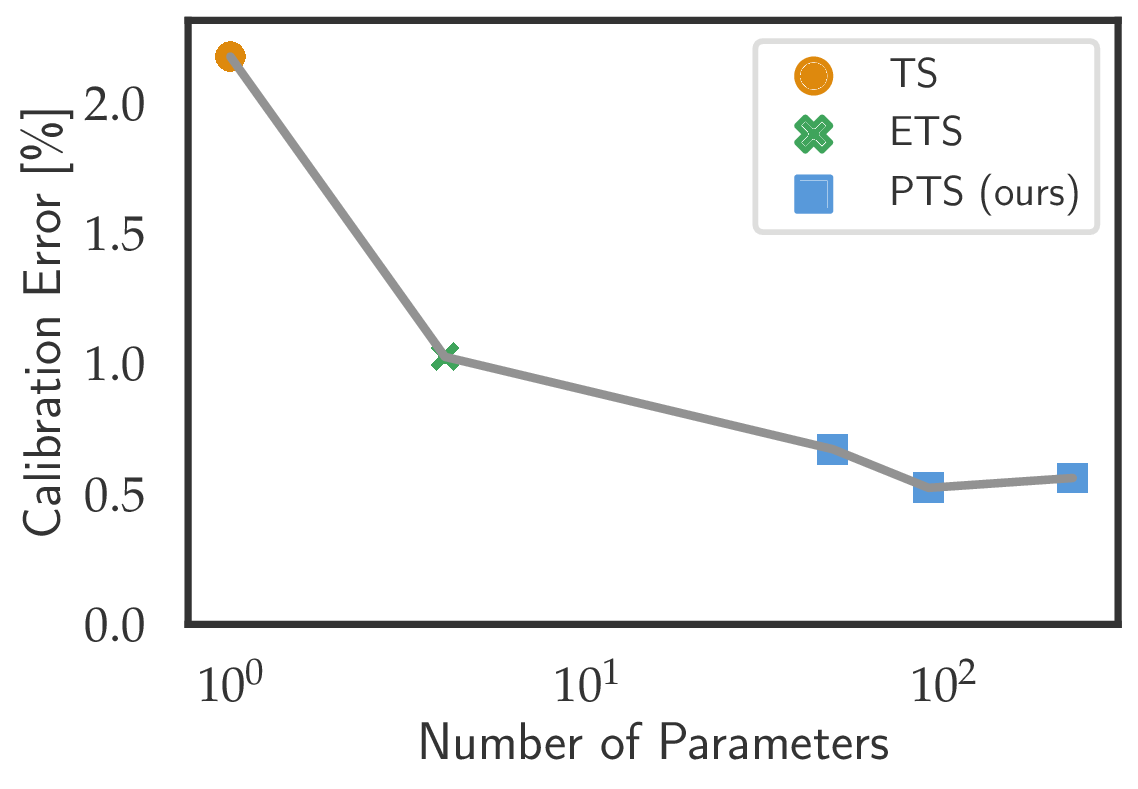

- Parameterized Temperature Scaling for Boosting the Expressive Power in Post-Hoc Uncertainty Calibration, ECCV 2022.

- Post-hoc Uncertainty Calibration for Domain Drift Scenarios, CVPR 2021, Oral Presentation.

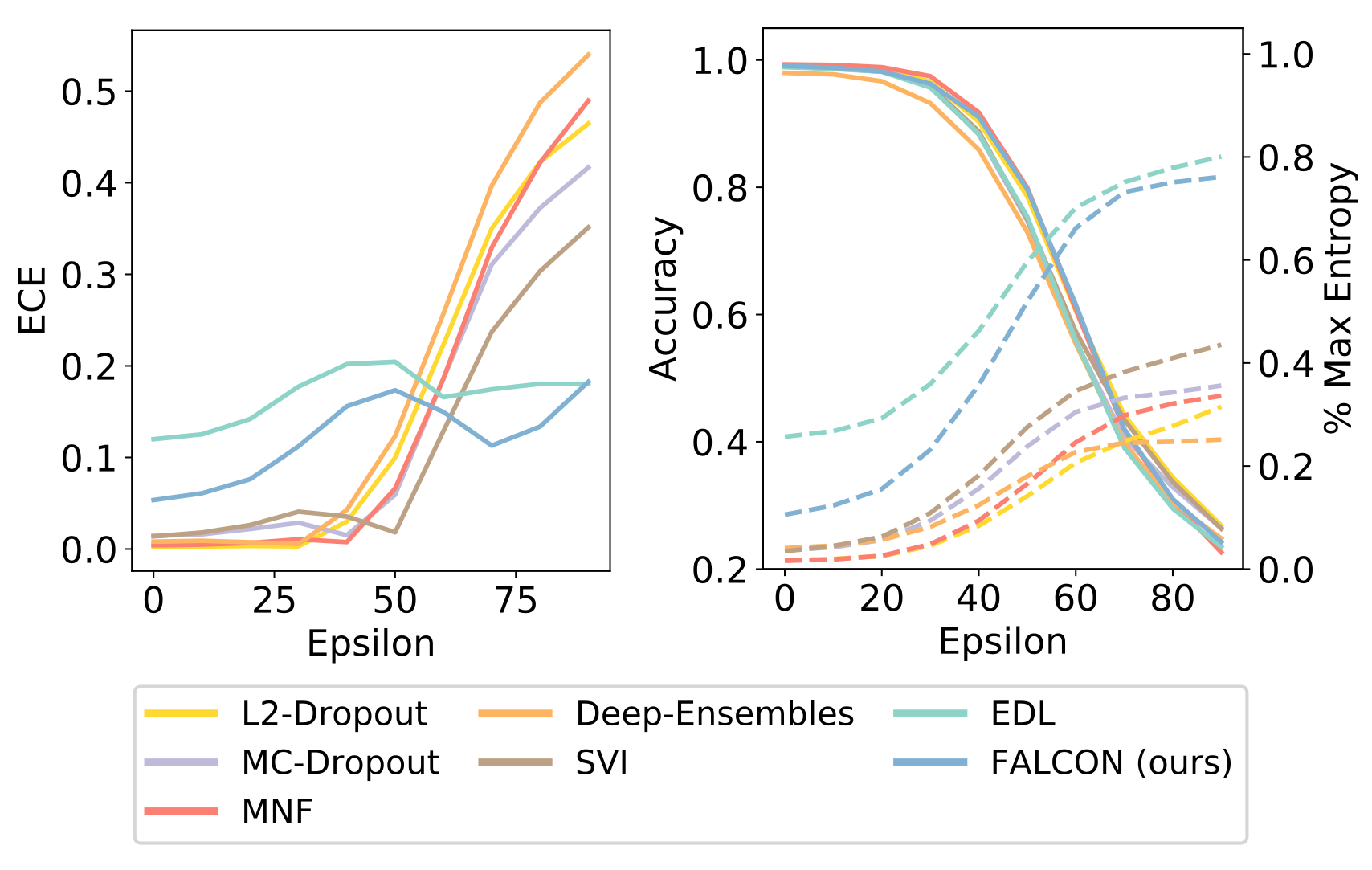

- Towards Trustworthy Predictions from Deep Neural Networks with Fast Adversarial Calibration, AAAI 2021.

To facilitate a wide-spread acceptance of AI systems guiding decision making in real-world applications, trustworthiness of deployed models is key. That is, it is crucial for predictive models to be uncertainty-aware and yield well-calibrated (and thus trustworthy) predictions for both in-domain samples as well as under domain shift. Recent efforts to account for predictive uncertainty include post-processing steps for trained neural networks, Bayesian neural networks as well as alternative non-Bayesian approaches such as ensemble approaches and evidential deep learning. Here, we propose an efficient yet general modelling approach for obtaining well-calibrated, trustworthy probabilities for samples obtained after a domain shift. We introduce a new training strategy combining an entropy-encouraging loss term with an adversarial calibration loss term and demonstrate that this results in well-calibrated and technically trustworthy predictions for a wide range of domain drifts. We comprehensively evaluate previously proposed approaches on different data modalities, a large range of data sets including sequence data, network architectures and perturbation strategies. We observe that our modelling approach substantially outperforms existing state-of-the-art approaches, yielding well-calibrated predictions under domain drift.
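To make the notion of "well-calibrated" in the abstract above concrete, here is a minimal sketch of the expected calibration error (ECE), a commonly used calibration metric. It is an illustration only and not the training strategy or evaluation code from the paper; the function name, the equal-width binning and the example data are assumptions made for this sketch.

```python
def expected_calibration_error(confidences, correct, n_bins=10):
    """Equal-width-bin ECE (illustrative sketch, not the paper's code).

    confidences: predicted top-class probabilities in [0, 1]
    correct:     booleans, True where the prediction was right
    Returns the sample-weighted mean of |accuracy - mean confidence| per bin.
    """
    if len(confidences) != len(correct):
        raise ValueError("confidences and correct must have the same length")
    if not confidences:
        raise ValueError("at least one prediction is required")
    if any(c < 0.0 or c > 1.0 for c in confidences):
        raise ValueError("confidences must lie in [0, 1]")

    # Group predictions into equal-width confidence bins.
    bins = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        # A confidence of exactly 1.0 is placed in the last bin.
        idx = min(int(conf * n_bins), n_bins - 1)
        bins[idx].append((conf, ok))

    n = len(confidences)
    ece = 0.0
    for members in bins:
        if not members:
            continue  # empty bins contribute nothing
        mean_conf = sum(c for c, _ in members) / len(members)
        accuracy = sum(1 for _, ok in members if ok) / len(members)
        ece += (len(members) / n) * abs(accuracy - mean_conf)
    return ece


# Hypothetical predictions: four made at 90% confidence (three correct)
# and two made at 60% confidence (one correct).
example_conf = [0.9, 0.9, 0.9, 0.9, 0.6, 0.6]
example_correct = [True, True, True, False, True, False]
example_ece = expected_calibration_error(example_conf, example_correct)
```

In this hypothetical example the model is overconfident in both bins (90% confidence but 75% accuracy, 60% confidence but 50% accuracy), so the ECE is greater than zero. A model whose confidence matches its accuracy in every bin would score zero.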

2024

Preprints

Uncertainty-Based Abstention in LLMs Improves Safety and Reduces Hallucinations, In arXiv preprint, 2024.

Conference and Workshop Papers

Quality-Aware Translation Models: Efficient Generation and Quality Estimation in a Single Model, In Proceedings of the 62nd Annual Meeting of the Association for Computational Linguistics (ACL), 2024.

2023

Conference and Workshop Papers

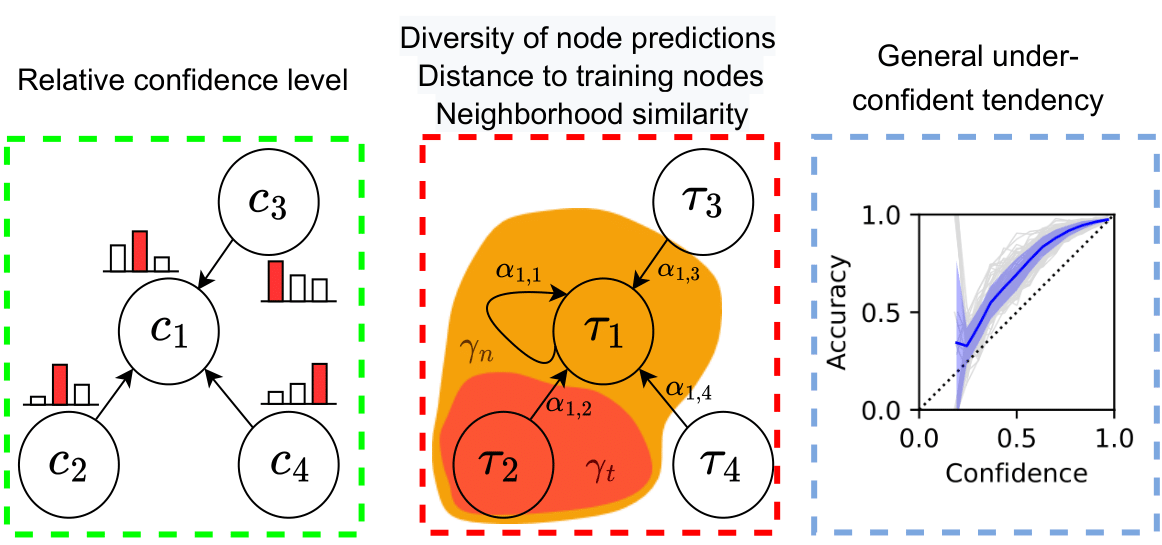

Beyond In-Domain Scenarios: Robust Density-Aware Calibration, In Proceedings of the 40th International Conference on Machine Learning (ICML), 2023.

2022

Preprints

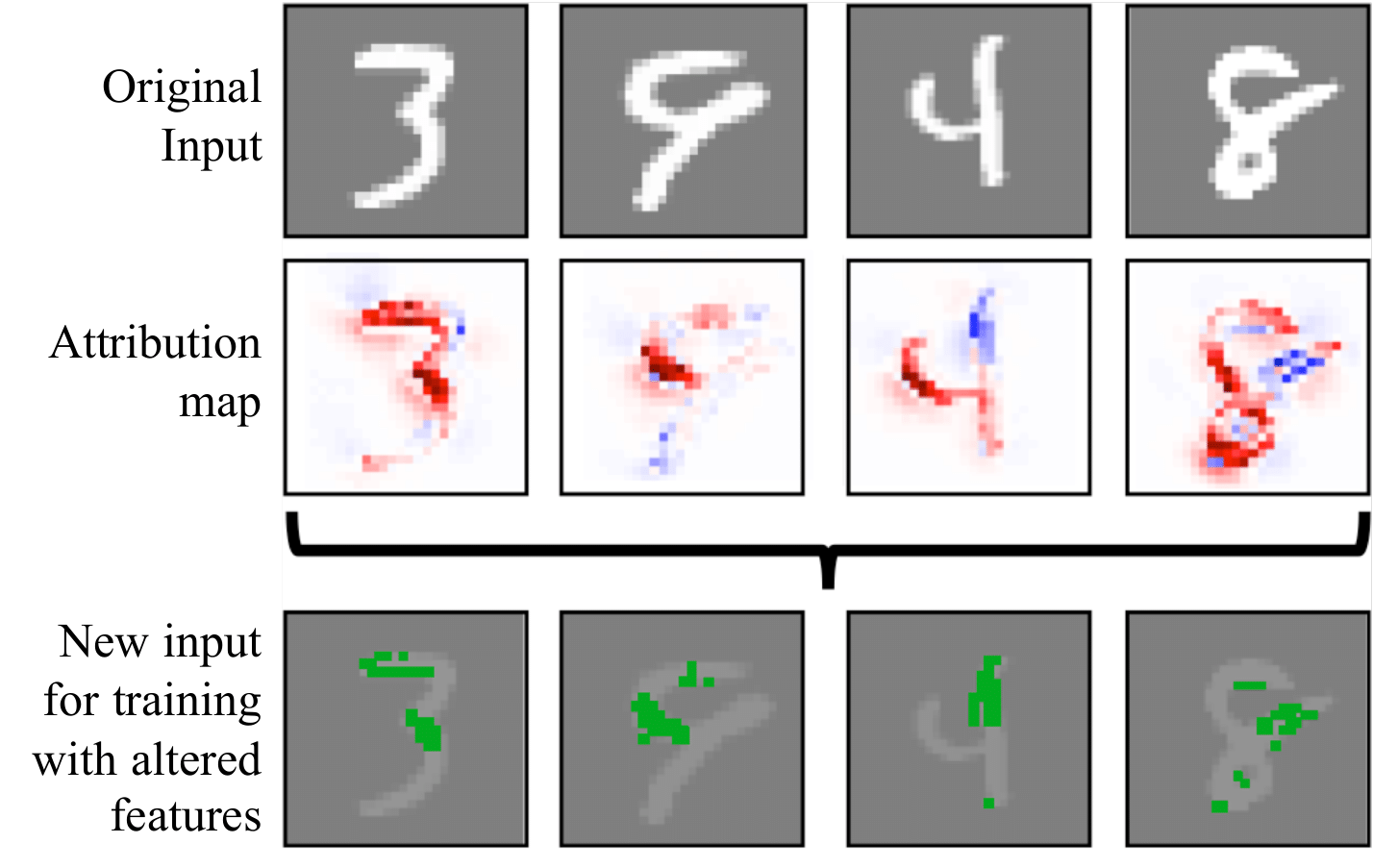

Challenger: Training with Attribution Maps, In arXiv preprint, 2022.

Conference and Workshop Papers

What Makes Graph Neural Networks Miscalibrated?, In NeurIPS, 2022. ([code])

Parameterized Temperature Scaling for Boosting the Expressive Power in Post-Hoc Uncertainty Calibration, In European Conference on Computer Vision (ECCV), 2022.

2021

Conference and Workshop Papers

Post-hoc Uncertainty Calibration for Domain Drift Scenarios, In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2021.

Oral Presentation

Towards Trustworthy Predictions from Deep Neural Networks with Fast Adversarial Calibration, In Thirty-Fifth AAAI Conference on Artificial Intelligence (AAAI-2021), 2021.