Convex Optimization for Machine Learning and Computer Vision (IN2330) (2h + 2h, 6 ECTS)

Announcement:

The retake exam has been rescheduled to July 8.

The retake exam is unfortunately canceled due to the Coronavirus outbreak.

The location of the final exam has been updated.

There is no lecture on 23.12. The tutorial session on 08.01 will be replaced by a lecture.

There will be no lecture/tutorial on 09.12 and 11.12 due to the NeurIPS conference.

The tutorial on 30.10 will be given by Yuesong Shen.

The first lecture takes place on 21.10. There will be no lectures on 14.10 and 16.10.

Introduction:

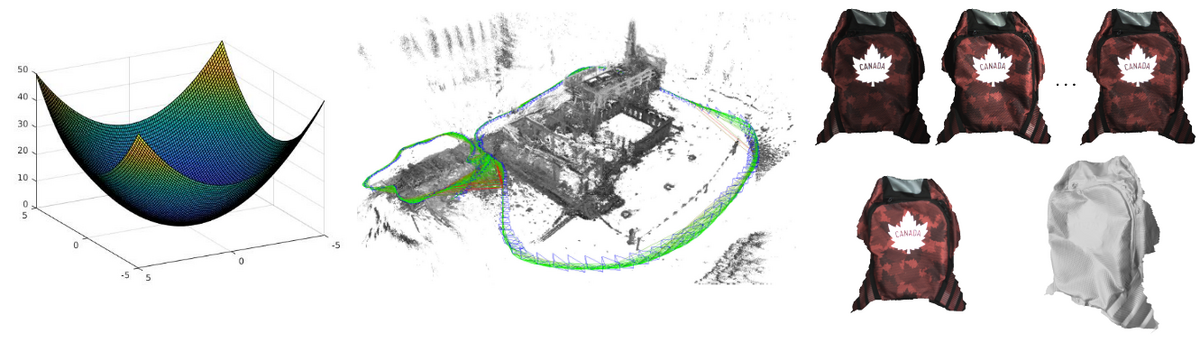

Many important machine learning and computer vision tasks can be formulated as convex optimization problems, e.g. training of SVMs, logistic regression, low-rank and sparse matrix decomposition, image segmentation, stereo matching, and surface reconstruction. In this lecture we will discuss first-order convex optimization methods that solve these problems efficiently. Particular attention will be paid to problems with constraints and non-differentiable terms, which give rise to methods that exploit the concept of duality, such as the primal-dual hybrid gradient method and the alternating direction method of multipliers. The lecture will cover the mathematical background needed to understand why these methods converge, as well as the details of their efficient implementation.

We will cover the following topics:

Elements of convex analysis

- Convex sets and convex functions

- Existence and uniqueness of minimizers

- Subdifferential

- Convex conjugate

- Duality theory

- Moreau envelope

Numerical methods

- Gradient-based methods

- Proximal algorithms: primal-dual hybrid gradient method, alternating direction method of multipliers

- Unified convergence analysis

- Acceleration techniques

Exemplary applications in machine learning and computer vision include

- Logistic regression

- Training of SVMs

- Image reconstruction (e.g. denoising, inpainting, segmentation)

- Low-rank and sparse matrix decomposition

We will implement some of them in Python and MATLAB.
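To give a rough idea of what such an implementation can look like, here is a minimal Python sketch of a proximal gradient iteration. It is not taken from the course materials: the test problem (l1-regularized least squares), the function names, and the parameters `lam` and `num_iters` are assumptions chosen purely for illustration. The non-differentiable l1 term is handled through its proximal operator (soft-thresholding), while the smooth least-squares term is handled by a plain gradient step.

```python
import numpy as np


def soft_threshold(v, tau):
    """Proximal operator of tau * ||.||_1, i.e. componentwise shrinkage."""
    return np.sign(v) * np.maximum(np.abs(v) - tau, 0.0)


def proximal_gradient(A, b, lam, num_iters=500):
    """Illustrative solver for min_x 0.5 * ||A x - b||^2 + lam * ||x||_1."""
    # Step size 1/L, where L = ||A||_2^2 is the Lipschitz constant
    # of the gradient of the smooth least-squares term.
    step = 1.0 / np.linalg.norm(A, 2) ** 2
    x = np.zeros(A.shape[1])
    for _ in range(num_iters):
        grad = A.T @ (A @ x - b)  # gradient of the smooth term
        x = soft_threshold(x - step * grad, step * lam)  # prox of the l1 term
    return x


def objective(A, b, lam, x):
    """Value of the composite objective at x."""
    return 0.5 * np.sum((A @ x - b) ** 2) + lam * np.sum(np.abs(x))
```

With the fixed step size 1/L used here, the objective value does not increase from one iteration to the next, which makes `objective` a convenient sanity check when experimenting.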

Lecture

Location: Room 02.09.023

Time and Date: Monday 16:15 – 18:00

Start: October 21st, 2019

Lecturer: Dr. Tao Wu

The lecture is held in English.

Exercise

Location: Room 02.09.023

Time and Date: Wednesday 12:15 – 14:00

Start: October 23rd, 2019

Organization: Zhenzhang Ye

The exercise sheets consist of two parts: theoretical and programming exercises.

Exercise sheets will be posted every Monday and are due one week later.

Please submit the programming solutions via email to yez@in.tum.de as a single zip file named "matriculationnumber_firstname_lastname.zip". The zip file should contain only your code files (no material files). Hand in the solutions to the theoretical part in Monday's lecture.

We will give you back the corrected sheets on Wednesday when we discuss them in class.

Please remember to write clean, commented(!) code! You are allowed to work on the exercise sheets in groups of two students.

The exercise sheets can be accessed here.

Exam Bonus

To achieve the bonus, you have to meet two requirements:

1. Obtain at least 75% of the total points over all exercises, i.e. the sum of the points you receive on all exercise sheets divided by the sum of the achievable points (without bonus) must be >= 0.75.

2. Present your solution to a theoretical exercise during the tutorial at least once this semester.

The bonus cannot improve a grade of 1.0 or 5.0.
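As a quick way to check the two requirements yourself, the rule can be sketched in Python as follows. The function name and its arguments are hypothetical and not part of any official grading tool; the sketch assumes you have the points you received and the achievable points (without bonus) for each sheet.

```python
def qualifies_for_bonus(points_earned, points_possible, presented_in_tutorial):
    """Check both bonus requirements (hypothetical helper, not official)."""
    total_possible = sum(points_possible)
    if total_possible == 0:
        return False  # no graded sheets yet, so the ratio is undefined
    # Requirement 1: at least 75% of all achievable points.
    ratio = sum(points_earned) / total_possible
    # Requirement 2: presented a theoretical solution at least once.
    return ratio >= 0.75 and presented_in_tutorial
```

For example, `qualifies_for_bonus([8, 7, 9], [10, 10, 10], True)` evaluates the ratio 24/30 = 0.8 and returns `True`.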

Exam

Date: February 28th, 17:00 - 18:30.

Place: MW 0350, Egbert-von-Hoyer-Hörsaal (5503.EG.350).

The final exam will be written. You are allowed to bring one A4-page, handwritten cheat sheet to the exam.

Retake Exam

Date: July 8th, 10:45 - 12:15.

Place: 0.002, Tentomax HS2 (5538.EG.002)

The exam is written. You are allowed to bring one A4-size, double-sided, handwritten cheat sheet to the exam.

Lecture Materials

Course materials (slides and exercise sheets/solutions) can be accessed here.

Send us an email if you need the password.