Visual-Inertial Dataset

Contact : David Schubert, Nikolaus Demmel, Vladyslav Usenko.

The TUM VI Benchmark for Evaluating Visual-Inertial Odometry

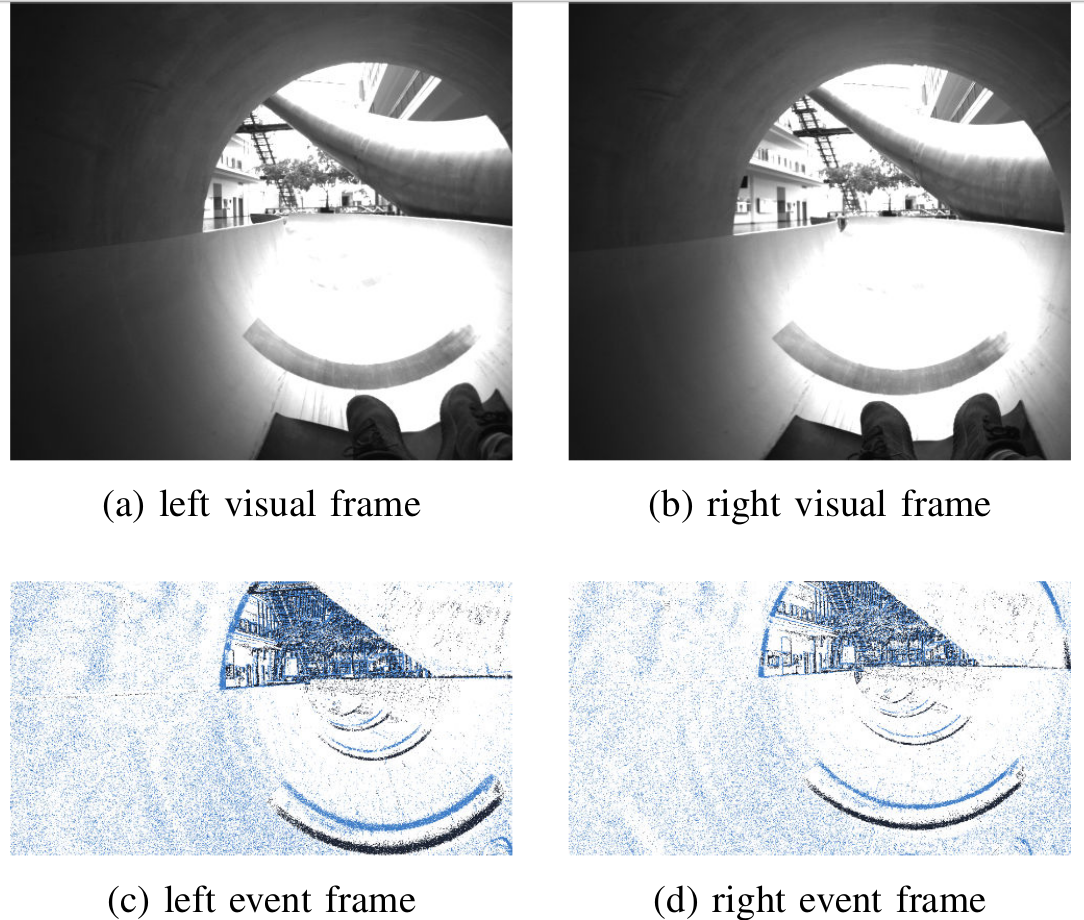

Visual odometry and SLAM methods have a large variety of applications in domains such as augmented reality or robotics. Complementing vision sensors with inertial measurements tremendously improves tracking accuracy and robustness, and thus has spawned large interest in the development of visual-inertial (VI) odometry approaches. In this paper, we propose the TUM VI benchmark, a novel dataset with a diverse set of sequences in different scenes for evaluating VI odometry. It provides camera images with 1024x1024 resolution at 20Hz, high dynamic range and photometric calibration. An IMU measures accelerations and angular velocities on 3 axes at 200Hz, while the cameras and IMU sensors are time-synchronized in hardware. For trajectory evaluation, we also provide accurate pose ground truth from a motion capture system at high frequency (120Hz) at the start and end of the sequences which we accurately aligned with the camera and IMU measurements. The full dataset with raw and calibrated data will be made publicly available. We also evaluate state-of-the-art VI odometry approaches on our dataset.

Export as PDF, XML, TEX or BIB

Conference and Workshop Papers

2021

[]

TUM-VIE: The TUM Stereo Visual-Inertial Event Dataset , In International Conference on Intelligent Robots and Systems (IROS), 2021. ([project page])

2018

[]

The TUM VI Benchmark for Evaluating Visual-Inertial Odometry , In International Conference on Intelligent Robots and Systems (IROS), 2018. ([arxiv])

Dataset

All files are available for batch download at:

- Bag 1024x1024: https://vision.in.tum.de/tumvi/calibrated/1024_16/

- Bag 512x512: https://vision.in.tum.de/tumvi/calibrated/512_16/

- Euroc/DSO 1024x1024: https://vision.in.tum.de/tumvi/exported/euroc/1024_16/

- Euroc/DSO 512x512: https://vision.in.tum.de/tumvi/exported/euroc/512_16/

Example script to download a whole folder:

wget -R "index.*" -m -np -nH --no-check-certificate -e robots=off \

https://cdn3.vision.in.tum.de/tumvi/exported/euroc/512_16/

# optionally verify md5 sums:

cd tumvi/exported/euroc/512_16

md5sum -c *.md5

Dataset Sequences

We provide several types of sequences for evaluating the Visual-Intertial odometry algorithms:

- room : Sequences in the room with Motion Capture system, where ground truth poses are available for the entire trajectory.

- corridor : Sequences in the corridor and several offices where ground truth poses are available for the start and end segments.

- magistrale : Sequences in the large hall. Ground truth poses are available for the start and end segments.

- outdoors : Outdoor sequences. Ground truth poses are available for the start and end segments.

- slides : Specially changeling sequences which contain sliding down in a closed tube with very bad illumination inside. Ground truth poses are available for the start and end segments.

All sequences have been processed to have:

- consistent timestamps for camera, IMU, and ground truth poses.

- proper IMU scaling and axis alignment.

- ground truth poses in the IMU frame.

- some ground truth outliers removed with a simple median filter.

Calibration Sequences

We provide several types of calibration sequences:

- cam-calib : for camera calibration, i.e. for the intrinsic camera parameters and the relative pose between two cameras. The grid of AprilTags has been recorded at low frame rate with changing viewpoints.

- imu-calib : calibration sequence to find the relative pose between cameras and imu. Includes rapid motions in front of the April grid exciting all 6 degrees of freedom. A smaller exposure has been chosen to avoid motion blur.

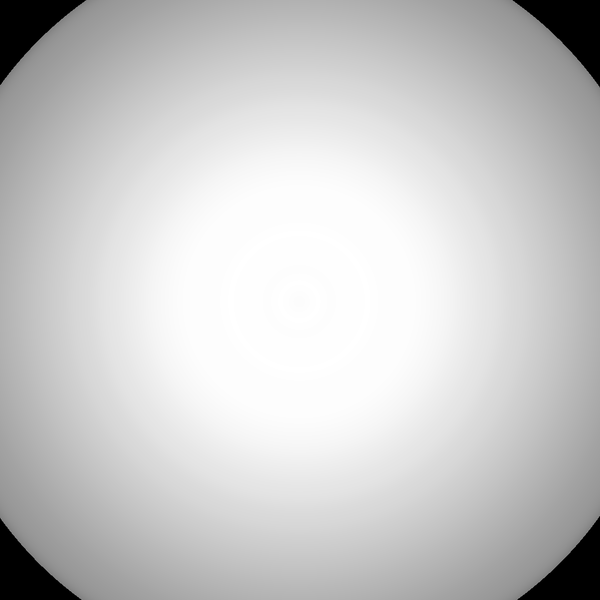

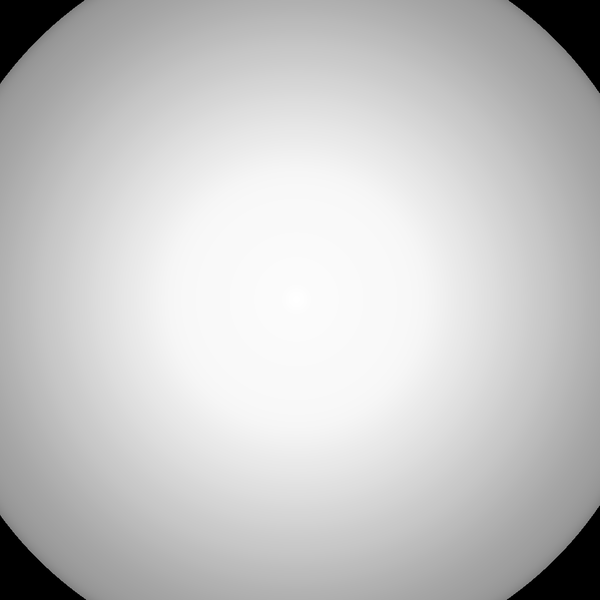

- vignette-calib : for vignette calibration. Features motion in front of a white wall with a calibration tag in the middle.

- imu-static : IMU data only, to estimate noise and random walk parameters of the IMU. The setup is standing still for 111 hours. For nympy format every row contains: timestamp, gyro_x, gyro_y, gyro_z, accel_x, accel_y, accel_z, temperature.

| Sequence name | Bag | Numpy | Duration |

| calib-imu-static2 | bag (27.55GB) | npy (4.77GB) | 111 hours |

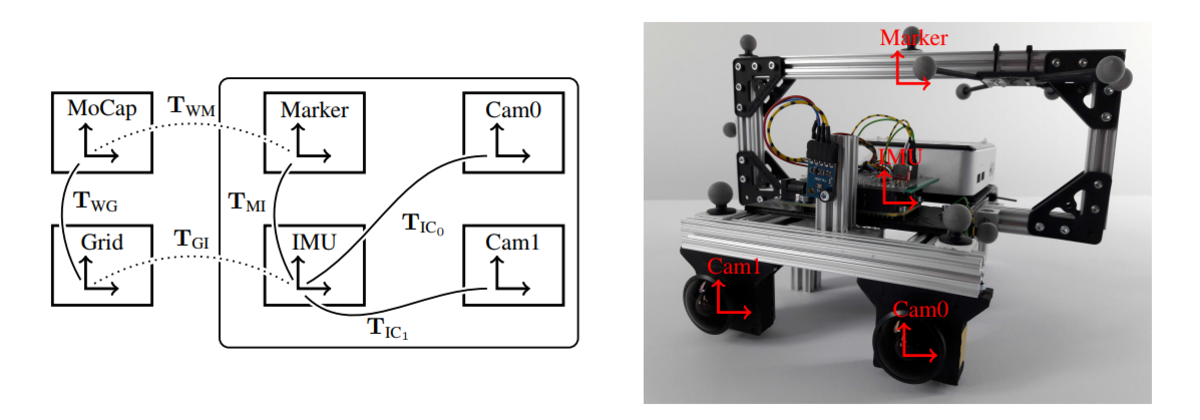

Hardware Description

Our sensor setup consists of two monochrome cameras in a stereo setup and an IMU. The IMU is rigidly connected to the two cameras and several IR-reflective markers which allow for pose tracking of the sensor setup by the motion capture (MoCap) system. For calibrating the camera intrinsics and the extrinsics of the sensor setup, we use a grid of AprilTags which has a fixed pose in the MoCap reference (world) system.

Geometric calibration

To perform geometric calibration the dataset contains several calibration sequences (calib-cam). They contain slow motions and well exposed calibration pattern ( AprilGrid) such that camera intrinsics and extrinsics can be estimated. As an example, we provide calibration files generated with kalibr for omidirectional (1024x1024), omidirectional (512x512) and pinhole (1024x1024) pinhole (512x512) camera models.

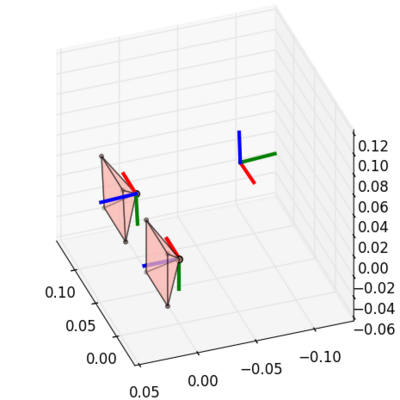

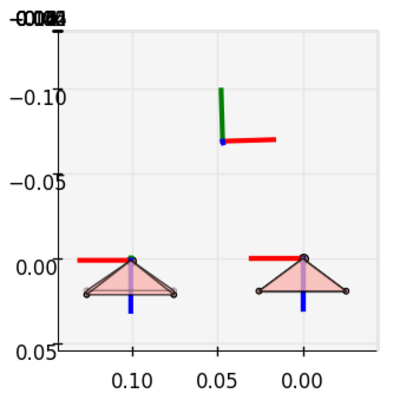

The following visualizes the camera and imu frames for one of the calibration results:

Photometric calibration

All images in the dataset have 16-bit intensity depth and linear response function. Vignetting calibration using the method from TUM Monocular dataset is provided here. Vignette (for both resolutions) and photometric response calibration files (which is linear) in the format that DSO expects can be downloaded here. We also include vignette calibration datasets (calib-vignette). This makes it possible to repeat the calibration procedure or use alternative tools for vignetting estimation.

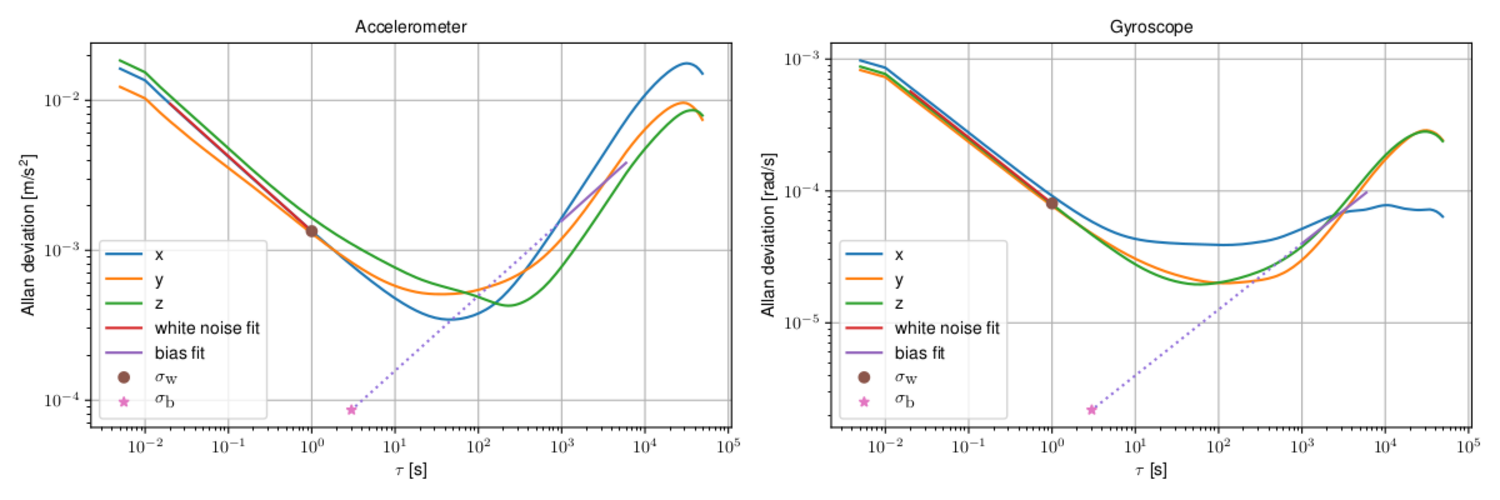

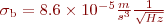

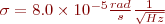

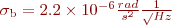

IMU Noise Density

The numerical values of noise densities  can be found at an integration time of

can be found at an integration time of  on the straight line with slope

on the straight line with slope  , while bias parameters

, while bias parameters  are identified as the value on the straight line with slope

are identified as the value on the straight line with slope  at an integration time of

at an integration time of  . This results in

. This results in  ,

,  for the accelerometer and

for the accelerometer and  ,

,  for the gyroscope.

for the gyroscope.

rostopic: /imu0 update_rate: 200.0 # Hz # Values from allan plots # sequence: dataset-calib-imu-static2.bag (full data range) #accelerometer_noise_density: 0.0014 # m/s^1.5 #accelerometer_random_walk: 0.000086 # m/s^2.5 #gyroscope_noise_density: 0.000080 # rad/s^0.5 #gyroscope_random_walk: 0.0000022 # rad/s^1.5 # Inflated values (to account for unmodelled effects) # Those values work well with Kalibr cam-imu calibration. # - white noise multiplied by 2 # - bias random walk multiplied by 10 accelerometer_noise_density: 0.0028 # m/s^1.5 accelerometer_random_walk: 0.00086 # m/s^2.5 gyroscope_noise_density: 0.00016 # rad/s^0.5 gyroscope_random_walk: 0.000022 # rad/s^1.5

Evaluation Results

We provide evaluation of several state-of-the-art VI methods on our dataset. For quantitative evaluation please refer to the paper. Here we provide the visualization of resulting trajectories (clicking on the method name toggles visibility).

The presented results for OKVIS are obtained with synchronous processing, without enforcing real-time and otherwise default parameters. Noise parameters are set to inflated values from the Allan plots (inflated 50 times for white noise and 20 times for bias random walk, which gave the best result in a simple parameter search). For your reference we provide the configuration file we used for OKVIS.

ROVIO is run in a similar configuration to OKVIS (default parameters, synchronous, no real-time enforcement, noise values from Allan plot inflated 10 times for all parameters as determined by a simple parameter search). For your reference we provide the configuration file we used for ROVIO.

Vins-Mono and Basalt VIO are also run using the default parameters.

Raw Data

To download all raw bags at once use the following command:

wget -R "index.*" -m -np -nH --no-check-certificate -e robots=off https://cdn3.vision.in.tum.de/tumvi/raw # optionally verify md5 sums: cd tumvi/raw md5sum -c *.md5

| Sequence name | Download raw dataset | Video | Video 5x |

| calib-cam1 | bag (1.71GB) | play | play (5x) |

| calib-cam2 | bag (1.10GB) | play | play (5x) |

| calib-cam3 | bag (1.32GB) | play | play (5x) |

| calib-cam4 | bag (1.03GB) | play | play (5x) |

| calib-cam5 | bag (1.48GB) | play | play (5x) |

| calib-cam6 | bag (1.39GB) | play | play (5x) |

| calib-cam7 | bag (0.59GB) | play | play (5x) |

| calib-cam8 | bag (0.79GB) | play | play (5x) |

| calib-imu1 | bag (4.06GB) | play | play (5x) |

| calib-imu2 | bag (4.15GB) | play | play (5x) |

| calib-imu3 | bag (3.80GB) | play | play (5x) |

| calib-imu4 | bag (3.44GB) | play | play (5x) |

| calib-vignette2 | bag (8.66GB) | play | play (5x) |

| calib-vignette3 | bag (6.71GB) | play | play (5x) |

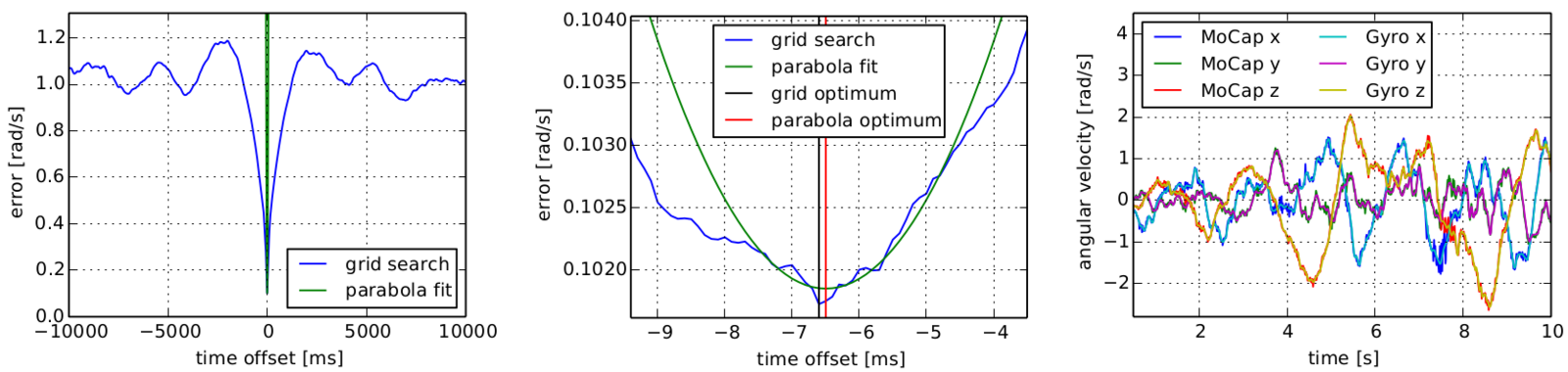

Time Alignment, Hand-Eye Calibration, IMU Axis Scaling and Misalignment

To estimate time offsets and transformation between marker and IMU frame we formulate a least squares problem that jointly estimates those variables. The results of this optimization on calib-imu1 sequence are presented below. Those values were used to obtain calibrated datasets from raw data.

Please note that the calibrated datasets already have corrected IMU measurements and Ground Truth in IMU frame.

T_i_c0:

-0.999506 0.00759167 -0.030488 0.0447659

0.0302105 -0.0343071 -0.998955 -0.0755245

-0.00862969 -0.999383 0.0340608 -0.0465419

0 0 0 1

T_i_c1:

-0.999497 0.00813335 -0.0306525 -0.0561178

0.0307588 0.0132798 -0.999439 -0.0738562

-0.00772172 -0.999879 -0.0135233 -0.0494102

0 0 0 1

T_w_moc:

-0.999641 0.0266075 -0.00313757 0.146549

-0.00698843 -0.145899 0.989275 -0.192952

0.0258644 0.988942 0.146032 0.766471

0 0 0 1

T_mark_i:

0.99975 -0.0167084 0.0148858 -0.0105073

0.0166986 0.99986 0.000784897 -0.0596456

-0.0148968 -0.00053613 0.999889 -0.0389606

0 0 0 1

cam_time_offset_ns: 126788

mocap_time_offset_ns: -6022600

accel_bias: -1.30318 -0.391441 0.380509

accel_scale:

1.00422 0 0

-7.82123e-05 1.00136 0

-0.0097745 -0.000976476 0.970467

gyro_bias: 0.0283122 0.00723077 0.0165292

gyro_scale:

0.943611 0.00148681 0.000824366

0.000369694 1.09413 -0.00273521

-0.00175252 0.00834754 1.01588

For all sequences (except vignette-calib and imu-static) the time alignment with ground truth data is performed by aligning gyro measurements and rotational velocities obtained from differentiating ground truth orientations.

The estimated time offsets in nanoseconds between IMU and MoCap clocks are summarized in the following table. These can be used when working with the raw sequences:

calib-cam1: -55867441862836 calib-cam2: -55867445161167 calib-cam3: -55867449676681 calib-cam4: -55867452091116 calib-cam5: -55867461457686 calib-cam6: -55868859813923 calib-cam7: -76223347808270 calib-cam8: -76223349465383 calib-imu1: 1946129713247 calib-imu2: 1946128794464 calib-imu3: 1946122260117 calib-imu4: 1946119662908 corridor1: -2649339151542 corridor2: -88258330072435 corridor3: -88258341012132 corridor4: -88258450609044 corridor5: -88258473139910 magistrale1: -27299817805089 magistrale2: -27299882020602 magistrale3: -27299912519559 magistrale4: -27299943669587 magistrale5: -27300085454085 magistrale6: -88258358292777 outdoors1: -95213831426975 outdoors2: -99816919490861 outdoors3: -105135071561239 outdoors4: -106677025259965 outdoors5: -106677073378626 outdoors6: -27299744860311 outdoors7: -50219955417353 outdoors8: -71928330343879 room1: -2649297394118 room2: -2649308409992 room3: -2649314077241 room4: -2649317990030 room5: -2649326786209 room6: -88258447125234 slides1: -50219881655625 slides2: -50219893201666 slides3: -50219907795270

FAQ

Q: Playback with rosbag play stutters. Are there framedrops?

We checked for framedrops during recording and there should be none. If you notice actual framedrops, please do let us know!

The rosbag play issue might be caused by a long standing limitation of rosbag that causes such delays during playback. We choose a larger chunksize (50MB), since otherwise opening very large bag files takes long (i.e. even just calling rosbag info would otherwise take minutes). The downside is that during playback the current implementation of rosbag play loads each chunk fully before playing any message from it. See also https://github.com/ros/ros_comm/issues/117 for more info on this issue.

One solution for the smaller bags is to "rebag" them with the default chunk size and then work with those:

rosrun rosbag fastrebag.py dataset-room1_512_16.bag dataset-room1_512_16_small_chunks.bag

External Usage and Tools

Below are some known usage examples and/or tools of other people concerning our dataset. We provide this for convenience, but inclusion in this list does not imply our endorsement or promise of any particular functionality. We thank the respective authors for sharing. If you would like to be added to the list, please send us an email.

* Image undistortion example with OpenCV Python: https://github.com/HTLife/tumvi_fisheye_calib

License

All data in the Visual Inertial Dataset is licensed under a Creative Commons 4.0 Attribution License (CC BY 4.0) and the accompanying source code is licensed under a BSD-2-Clause License.

Video of All Sequences