E-NeRF: Neural Radiance Fields from a Moving Event Camera

Code

Code is available at https://github.com/knelk/enerf.

Abstract

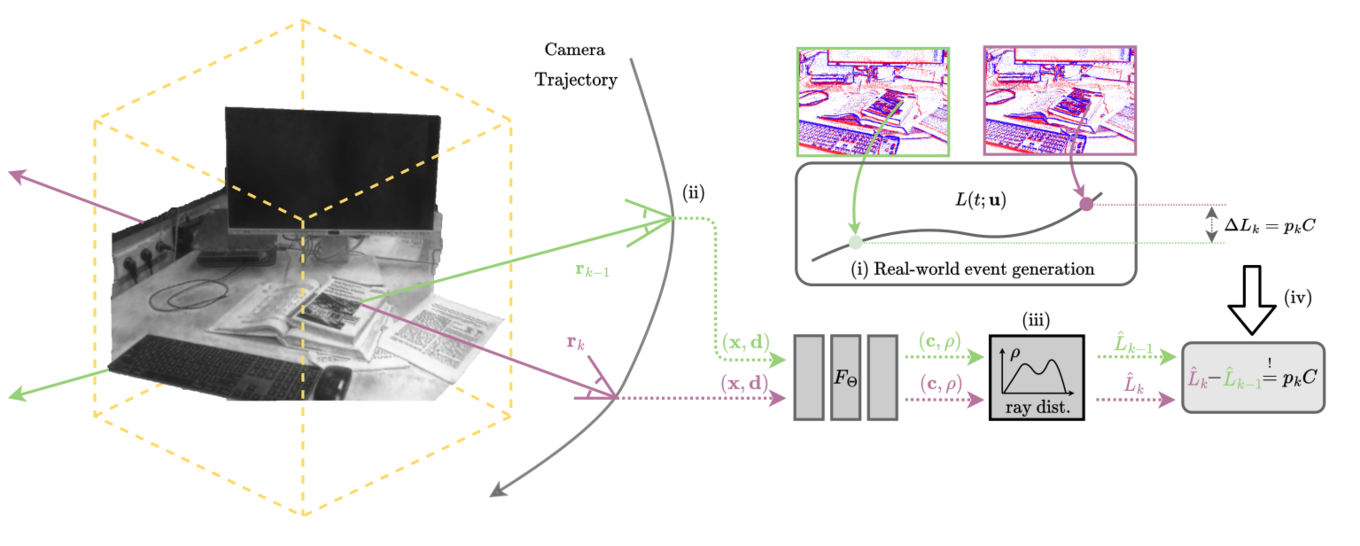

Estimating neural radiance fields (NeRFs) from ideal images has been extensively studied in the computer vision community. Most approaches assume optimal illumination and slow camera motion. These assumptions are often violated in robotic applications, where images may contain motion blur and the scene may lack suitable illumination. This can cause significant problems for downstream tasks such as navigation, inspection, or visualization of the scene. To alleviate these problems, we present E-NeRF, the first method that estimates a volumetric scene representation in the form of a NeRF from a fast-moving event camera. Our method can recover NeRFs during very fast motion and in high-dynamic-range conditions, where frame-based approaches fail. We show that high-quality frames can be rendered when only an event stream is provided as input. Furthermore, by combining events and frames, we can estimate NeRFs of higher quality than state-of-the-art approaches under severe motion blur. We also show that combining events and frames can overcome failure cases of NeRF estimation in scenarios where only a few input views are available, without requiring additional regularization.
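Because an event camera reports only changes in log intensity, a radiance field can in principle be supervised from events alone: the change in rendered log intensity at a pixel between two timestamps should match the contrast threshold C times the sum of event polarities observed there in between. The sketch below illustrates this generic idea under stated assumptions (a known, fixed threshold, rendered intensities already available for both timestamps, per-pixel polarity sums precomputed). The function name `event_supervision_loss` and its parameters are hypothetical, and this is not claimed to be E-NeRF's exact loss formulation.

```python
import math


def event_supervision_loss(rendered_start, rendered_end, polarity_sums,
                           threshold=0.2, eps=1e-3):
    """Hypothetical event-based loss for one batch of pixels (illustrative sketch).

    rendered_start, rendered_end: rendered intensities per pixel at two timestamps.
    polarity_sums: per-pixel sum of event polarities (+1/-1) between the timestamps.
    threshold: assumed known contrast threshold C.
    eps: small offset so the logarithm is defined for zero intensity.
    """
    if not polarity_sums:
        raise ValueError("need at least one pixel")
    total = 0.0
    for i_start, i_end, p_sum in zip(rendered_start, rendered_end, polarity_sums):
        # Log-intensity change predicted by the rendered radiance field.
        predicted = math.log(i_end + eps) - math.log(i_start + eps)
        # Log-intensity change implied by the events (idealized event model).
        measured = threshold * p_sum
        total += (predicted - measured) ** 2
    # Mean squared error over the pixels in the batch.
    return total / len(polarity_sums)
```

In a full pipeline the rendered intensities would come from volume rendering the ray through each pixel at both timestamps, and the loss would be minimized with respect to the network weights.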

Datasets

The synthetic datasets were simulated using ESIM.
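Event simulators such as ESIM build on an idealized event generation model: a pixel fires an event of polarity +1 or -1 each time its log intensity has changed by a contrast threshold since that pixel's last event. The sketch below applies this model to a short sequence of intensity images. It is not ESIM's API or implementation (ESIM, for instance, samples rendered frames adaptively); the function `simulate_events`, its parameters, and the linear interpolation of event timestamps between frames are assumptions made for illustration.

```python
import math


def simulate_events(frames, timestamps, threshold=0.2, eps=1e-3):
    """Idealized event generation from a sequence of intensity images (sketch).

    frames: list of images, each a list of rows of non-negative intensities.
    timestamps: one increasing timestamp per frame.
    Returns a time-sorted list of (x, y, t, polarity) tuples.
    """
    height, width = len(frames[0]), len(frames[0][0])
    # Log intensity at which each pixel last fired, initialized from the first frame.
    ref = [[math.log(frames[0][y][x] + eps) for x in range(width)]
           for y in range(height)]
    events = []
    for k in range(1, len(frames)):
        t_prev, t_cur = timestamps[k - 1], timestamps[k]
        for y in range(height):
            for x in range(width):
                prev = math.log(frames[k - 1][y][x] + eps)
                cur = math.log(frames[k][y][x] + eps)
                # Fire one event per threshold crossing since the pixel's last event.
                while abs(cur - ref[y][x]) >= threshold:
                    polarity = 1 if cur > ref[y][x] else -1
                    ref[y][x] += polarity * threshold
                    # Assume log intensity varies linearly between the two frames and
                    # timestamp the event where it reaches the new reference level.
                    frac = (ref[y][x] - prev) / (cur - prev)
                    frac = min(max(frac, 0.0), 1.0)
                    events.append((x, y, t_prev + frac * (t_cur - t_prev), polarity))
    events.sort(key=lambda e: e[2])
    return events
```

For example, a pixel whose intensity doubles between two frames changes its log intensity by about ln 2 ≈ 0.69 and, with a threshold of 0.2, emits three positive events, while an unchanged pixel emits none.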

For the real-world experiments, please refer to the TUM-VIE and EDS datasets.

Publications

The paper will be presented at IROS 2023.

If you use our work, code or dataset, please cite:

E-NeRF: Neural Radiance Fields from a Moving Event Camera. In IEEE Robotics and Automation Letters, IEEE, volume 8, 2023.