Software

We provide the following software libraries as open source to the scientific community.

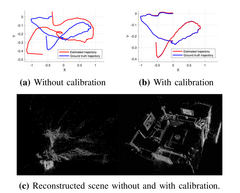

| Our implementation for online photometric calibration can be found on GitHub. For more information, see also photometric-calibration. The method is described in our research paper: Online Photometric Calibration of Auto Exposure Video for Realtime Visual Odometry and SLAM (P. Bergmann, R. Wang, D. Cremers), In IEEE Robotics and Automation Letters (RA-L), volume 3, 2018. |

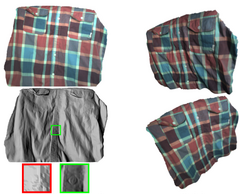

| This software framework provides MATLAB (or CUDA) code to perform depth map super-resolution using uncalibrated photometric stereo. The method is described in our research paper: Depth Super-Resolution Meets Uncalibrated Photometric Stereo (S. Peng, B. Haefner, Y. Quéau and D. Cremers), In ICCV Workshop on Color and Photometry in Computer Vision, 2017. |

| This CAPTCHA recognition framework provides C++/Python code to recognize CAPTCHAs using an active deep learning approach. The method is described in our research paper: CAPTCHA Recognition with Active Deep Learning (F. Stark, C. Hazırbaş, R. Triebel, D. Cremers), In GCPR Workshop on New Challenges in Neural Computation, 2015. |

| The Automatic Feature Selection (AFS) framework provides C++ code to select the best visual object recognition features for semantic segmentation problems, as described in our research paper: Optimizing the Relevance-Redundancy Tradeoff for Efficient Semantic Segmentation (C. Hazırbaş, J. Diebold, D. Cremers), In Scale Space and Variational Methods in Computer Vision (SSVM), 2015. |

| This link provides our framework as well as our small interactive RGB-D benchmark (RGB-D images, scribbles and ground truth labelings) for our research paper Interactive Multi-label Segmentation of RGB-D Images (J. Diebold, N. Demmel, C. Hazırbaş, M. Möller, D. Cremers), In Scale Space and Variational Methods in Computer Vision (SSVM), 2015. |

| This code implements the real-time algorithm for the Mumford-Shah functional as described in the following research paper: Real-Time Minimization of the Piecewise Smooth Mumford-Shah Functional (E. Strekalovskiy, D. Cremers), In European Conference on Computer Vision (ECCV), 2014. |

| A binary implementing the proportion priors multilabel approach from the following paper: Proportion Priors for Image Sequence Segmentation (C. Nieuwenhuis, E. Strekalovskiy, D. Cremers), In IEEE International Conference on Computer Vision (ICCV), 2013. The binary reads in the data terms and the proportions and writes out the resulting labeling. Example inputs are included to show how to use it. The implementation runs on the GPU using CUDA and was written by Evgeny Strekalovskiy. Download Binary: proportion_priors.zip |

| For our EdX course on autonomous quadrotors, we developed a JavaScript-based quadrotor simulator that runs directly in the browser. You can program this simulator using Python. You can also download the simulator for offline use. In that case, you need to enable JavaScript for local HTML pages in your browser. Alternatively, you can set up your own (local) web server. |

| This code implements the approach for real-time 3D mapping on a CPU as described in the following research paper: Volumetric 3D Mapping in Real-Time on a CPU (F. Steinbruecker, J. Sturm, D. Cremers), In Int. Conf. on Robotics and Automation, 2014. |

| The cudaMultilabelOptimization library can be used to a) solve multi-label optimization problems based on the Potts model by means of continuous optimization and the primal-dual algorithm on the GPU (see IJCV 2013), and b) compute a powerful data term for interactive image segmentation (see PAMI 2013). The code was implemented by Claudia Nieuwenhuis – Download Source Code: cudaMultilabelOptimization-v1.0.zip - September 10, 2013 (LGPL) |
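
For readers unfamiliar with the Potts model, the following minimal Python sketch evaluates a discrete Potts energy for a given labeling: a per-pixel data cost plus a constant penalty for every pair of neighboring pixels with different labels. It is only an illustration under stated assumptions. The function name `potts_energy`, its arguments, and the 4-neighborhood are hypothetical and are not part of cudaMultilabelOptimization, which solves a continuous formulation on the GPU rather than evaluating this discrete sum.

```python
def potts_energy(labels, data_cost, smoothness):
    """Evaluate a discrete Potts energy on a 2D grid (illustrative only).

    labels:     2D list of integer labels, labels[y][x]
    data_cost:  data_cost[k][y][x] is the cost of assigning label k to pixel (y, x)
    smoothness: penalty paid once for each 4-neighbor pair with different labels
    """
    height, width = len(labels), len(labels[0])
    energy = 0.0
    for y in range(height):
        for x in range(width):
            k = labels[y][x]
            # Data term: cost of the chosen label at this pixel.
            energy += data_cost[k][y][x]
            # Potts term: count each horizontal and vertical pair once.
            if x + 1 < width and labels[y][x + 1] != k:
                energy += smoothness
            if y + 1 < height and labels[y + 1][x] != k:
                energy += smoothness
    return energy


# Two labels on a 2x2 image: label 0 is cheap in the top row, label 1 in the bottom row.
data_cost = [
    [[0.0, 0.0], [1.0, 1.0]],  # costs for label 0
    [[1.0, 1.0], [0.0, 0.0]],  # costs for label 1
]
split = [[0, 0], [1, 1]]
uniform = [[0, 0], [0, 0]]
print(potts_energy(split, data_cost, smoothness=0.5))
print(potts_energy(uniform, data_cost, smoothness=0.5))
```

The smoothness weight trades data fidelity against boundary length: a larger value favors labelings with fewer label changes.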

| The dvo packages provide an implementation of visual odometry estimation from RGB-D images for ROS. In contrast to feature-based algorithms, the approach uses all pixels of two consecutive RGB-D images to estimate the camera motion. The implementation runs in realtime on a recent CPU. |

| The tum_ardrone ROS package enables the Parrot AR.Drone to fly autonomously, using PTAM-based visual navigation. The documentation and code can be found here. |

| We provide an extension of the Gazebo simulator that can be used as a transparent replacement for the Parrot AR.Drone quadrocopter. This allows algorithms to be developed and evaluated more quickly, because they do not need to be run on a real quadrocopter all the time. The software (including documentation) has been released as an open-source package in ROS: tum_simulator |

| The PlanarCut library computes max-flow/min-s-t-cut on planar graphs. It implements an efficient algorithm with almost linear running time. The library also provides several easy-to-use interfaces for defining planar graphs that are common in computer vision applications (see CVPR 2009). The code was implemented by Eno Töppe and Frank R. Schmidt – Download Source Code: planatcut-v1.0.2.zip - September 22, 2011 (LGPL) |
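
To illustrate what a max-flow/min-s-t-cut computation returns, here is a minimal Python sketch using the generic Edmonds-Karp algorithm on a small directed graph. This is an assumption-laden illustration, not PlanarCut's algorithm or API: Edmonds-Karp works on arbitrary graphs and does not exploit planarity, and the function name `max_flow` and the dict-of-dicts graph format are hypothetical.

```python
from collections import deque


def max_flow(capacity, source, sink):
    """Generic Edmonds-Karp max-flow (not the planar algorithm used by PlanarCut).

    capacity: dict of dicts, capacity[u][v] is the capacity of edge u -> v.
    Returns (flow value, set of nodes on the source side of a minimum cut).
    """
    # Build the residual graph, adding reverse edges with zero capacity.
    residual = {source: {}, sink: {}}
    for u, neighbors in capacity.items():
        for v, c in neighbors.items():
            residual.setdefault(u, {})
            residual.setdefault(v, {})
            residual[u][v] = residual[u].get(v, 0) + c
            residual[v].setdefault(u, 0)

    flow = 0
    while True:
        # Breadth-first search for a shortest augmenting path.
        parent = {source: None}
        queue = deque([source])
        while queue and sink not in parent:
            u = queue.popleft()
            for v, c in residual[u].items():
                if c > 0 and v not in parent:
                    parent[v] = u
                    queue.append(v)
        if sink not in parent:
            # No augmenting path is left: the nodes still reachable from
            # the source form the source side of a minimum s-t cut.
            return flow, set(parent)

        # Find the bottleneck capacity along the path.
        bottleneck = float("inf")
        v = sink
        while parent[v] is not None:
            bottleneck = min(bottleneck, residual[parent[v]][v])
            v = parent[v]
        # Push the bottleneck amount of flow along the path.
        v = sink
        while parent[v] is not None:
            u = parent[v]
            residual[u][v] -= bottleneck
            residual[v][u] += bottleneck
            v = u
        flow += bottleneck


# Small example graph with source "s" and sink "t".
graph = {
    "s": {"a": 3, "b": 2},
    "a": {"b": 1, "t": 2},
    "b": {"t": 3},
}
value, source_side = max_flow(graph, "s", "t")
print(value, source_side)
```

By the max-flow/min-cut theorem, the returned flow value equals the total capacity of the edges leaving the returned source-side set.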