Deep Virtual Stereo Odometry: Leveraging Deep Depth Prediction for Monocular Direct Sparse Odometry

Contact: Nan Yang, Rui Wang, Jörg Stückler, Prof. Daniel Cremers

ECCV 2018 oral presentation

Featured in Computer Vision News' Best of ECCV.

Abstract

Monocular visual odometry approaches that purely rely on geometric cues are prone to scale drift and require sufficient motion parallax in successive frames for motion estimation and 3D reconstruction. In this paper, we propose to leverage deep monocular depth prediction to overcome limitations of geometry-based monocular visual odometry. To this end, we incorporate deep depth predictions into DSO as direct virtual stereo measurements. For depth prediction, we design a novel deep network that refines predicted depth from a single image in a two-stage process. We train our network in a semi-supervised way on photoconsistency in stereo images and on consistency with accurate sparse depth reconstructions from Stereo DSO. Our deep predictions outperform state-of-the-art approaches for monocular depth on the KITTI benchmark. Moreover, our Deep Virtual Stereo Odometry clearly exceeds previous monocular and deep-learning-based methods in accuracy. It even achieves performance comparable to state-of-the-art stereo methods while relying on only a single camera.

Semi-Supervised Deep Monocular Depth Estimation

We propose a semi-supervised approach to deep monocular depth estimation. It builds on three key ingredients: self-supervised learning from photoconsistency in a stereo setup, supervised learning based on accurate sparse depth reconstruction by Stereo DSO, and StackNet, a two-stage network with a stacked encoder-decoder architecture.
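To make the first two ingredients concrete, the following is a minimal NumPy sketch of how a self-supervised photoconsistency term and a sparse supervised term could be combined into one loss. It is an illustration under stated assumptions, not the training loss used for StackNet: the function names, the plain L1 penalties, the `weight_sup` parameter, and the convention that a left pixel at column x matches the right image at column x - d are all assumptions, and any further terms of the actual loss are not covered here.

```python
import numpy as np


def warp_right_to_left(right, disp_left):
    """Reconstruct the left image by sampling the right image at x - d(x, y).

    Assumes rectified grayscale images and positive left disparities
    (hypothetical convention chosen for this sketch).
    """
    h, w = right.shape
    # Source column in the right image for every left pixel.
    xs = np.arange(w, dtype=np.float64)[None, :] - disp_left
    xs = np.clip(xs, 0.0, w - 1.0)
    x0 = np.floor(xs).astype(int)
    x1 = np.minimum(x0 + 1, w - 1)
    frac = xs - x0
    rows = np.arange(h)[:, None]
    # Linear interpolation along each image row.
    return (1.0 - frac) * right[rows, x0] + frac * right[rows, x1]


def semi_supervised_loss(left, right, disp_left, sparse_disp, weight_sup=1.0):
    """Photoconsistency term plus a supervised term on sparse disparities.

    sparse_disp holds reference disparities (e.g. converted from a sparse
    stereo reconstruction) and 0 where no reference is available.
    """
    recon = warp_right_to_left(right, disp_left)
    photometric = np.mean(np.abs(left - recon))
    valid = sparse_disp > 0
    if valid.any():
        supervised = np.mean(np.abs(disp_left[valid] - sparse_disp[valid]))
    else:
        supervised = 0.0
    return photometric + weight_sup * supervised
```

With a synthetic pair in which the left image is the right image shifted by two pixels, a constant disparity of 2 yields a loss of zero, while any other constant disparity yields a positive loss.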

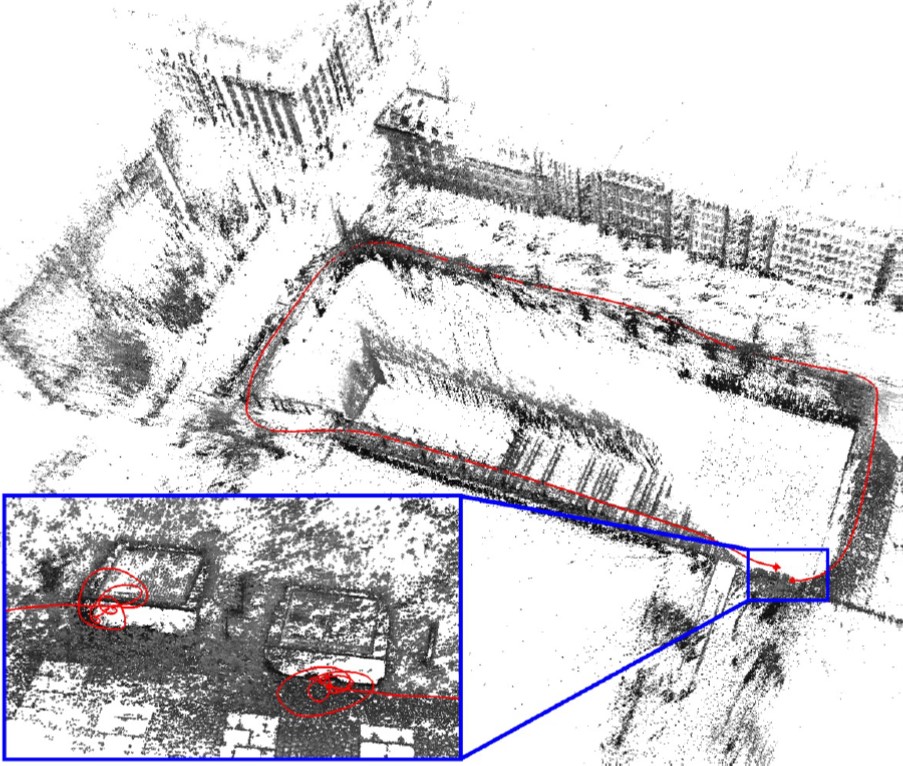

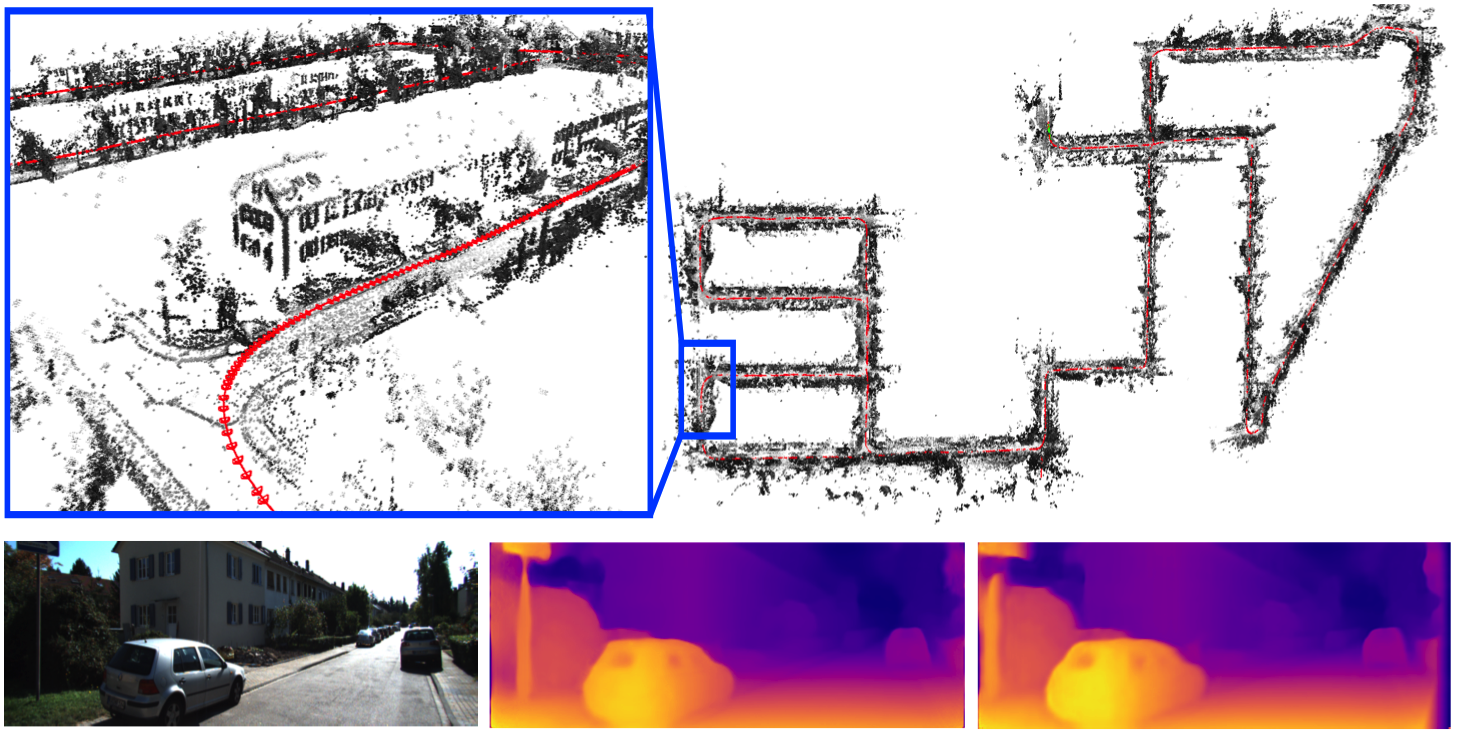

Deep Virtual Stereo Odometry

Deep Virtual Stereo Odometry (DVSO) builds on the windowed sparse direct bundle adjustment formulation of monocular DSO. We use our disparity predictions in DSO in two key ways. First, we initialize the depth maps of new keyframes from the predicted disparities. Second, beyond this rather straightforward use, we incorporate virtual direct image alignment constraints into the windowed direct bundle adjustment of DSO. Assuming a virtual stereo setup, we obtain these constraints by warping images using the depth estimated by bundle adjustment and the right disparities predicted by our network.
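The virtual stereo constraint can be sketched for a single point as follows. This is a simplified illustration, not the DVSO implementation: it assumes a rectified virtual stereo pair, a grayscale image, a raw intensity difference without robust norm or weighting, and the sign convention that a pixel at column x in the virtual right image corresponds to column x + d in the left image. The names `sample_row` and `virtual_stereo_residual` and the parameters `fx` and `baseline` are hypothetical.

```python
import numpy as np


def sample_row(image, x, y):
    """Linearly interpolate image row y at the (clamped) sub-pixel column x."""
    w = image.shape[1]
    x = float(np.clip(x, 0.0, w - 1.0))
    x0 = int(np.floor(x))
    x1 = min(x0 + 1, w - 1)
    frac = x - x0
    return (1.0 - frac) * image[y, x0] + frac * image[y, x1]


def virtual_stereo_residual(left, disp_right, u, v, inv_depth, fx, baseline):
    """Intensity residual of one point against a virtual right image.

    inv_depth is the inverse depth of the point as estimated by bundle
    adjustment; disp_right is the right disparity map predicted by the network.
    """
    # Project the point into the virtual right camera: disparity = fx * b / depth.
    u_virtual = u - fx * baseline * inv_depth
    # Synthesize the virtual right intensity by warping the left image
    # with the predicted right disparity at the projected location.
    d_r = sample_row(disp_right, u_virtual, v)
    virtual_intensity = sample_row(left, u_virtual + d_r, v)
    return virtual_intensity - sample_row(left, u, v)
```

Under these assumptions the residual vanishes when the disparity implied by the bundle adjustment depth agrees with the network prediction, so minimizing it pulls the estimated depth toward the predicted scale.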

Results

We quantitatively compare StackNet with other state-of-the-art monocular depth prediction methods on the publicly available KITTI dataset. For DVSO, we compare its tracking accuracy on the KITTI odometry benchmark against other state-of-the-art monocular and stereo visual odometry systems. The supplementary material also demonstrates the generalization ability of both StackNet and DVSO.

Monocular Depth Estimation

Monocular Visual Odometry

Downloads

Trajectories of DVSO on KITTI 00-10: dvso_kitti_00_10.zip

Publications

Journal Articles

2018

Direct Sparse Odometry, In IEEE Transactions on Pattern Analysis and Machine Intelligence, 2018.

Conference and Workshop Papers

2021

MonoRec: Semi-Supervised Dense Reconstruction in Dynamic Environments from a Single Moving Camera, In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2021. ([project page])

2020

D3VO: Deep Depth, Deep Pose and Deep Uncertainty for Monocular Visual Odometry, In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2020.

Oral Presentation

2018

Deep Virtual Stereo Odometry: Leveraging Deep Depth Prediction for Monocular Direct Sparse Odometry, In European Conference on Computer Vision (ECCV), 2018. ([arxiv],[supplementary],[project])

Oral Presentation

2017

Stereo DSO: Large-Scale Direct Sparse Visual Odometry with Stereo Cameras, In International Conference on Computer Vision (ICCV), 2017. ([supplementary],[video],[arxiv],[project])