Technical University of Munich

School of Computation, Information and Technology

Informatics 9

Boltzmannstrasse 3

85748 Garching

Germany

Fax: +49-89-289-17757

Mail:

Research Interests

Correspondence Problems, Segmentation, SLAM, Variational Methods, Partial Differential Equations

Brief Bio

Frank Steinbrücker received his Bachelor's degree in 2007 and his Master's degree in Computer Science in 2008 from Saarland University (Germany). Since September 2008 he has been a Ph.D. student in the Research Group for Computer Vision, Image Processing and Pattern Recognition at the University of Bonn, headed by Professor Daniel Cremers.

Visual Odometry

At ICCV 2011 we published a method for estimating the camera pose from RGB-D images. In the video below, a Kinect camera moves through a static scene while its poses are estimated accurately.

Dense Mapping of large RGB-D Sequences

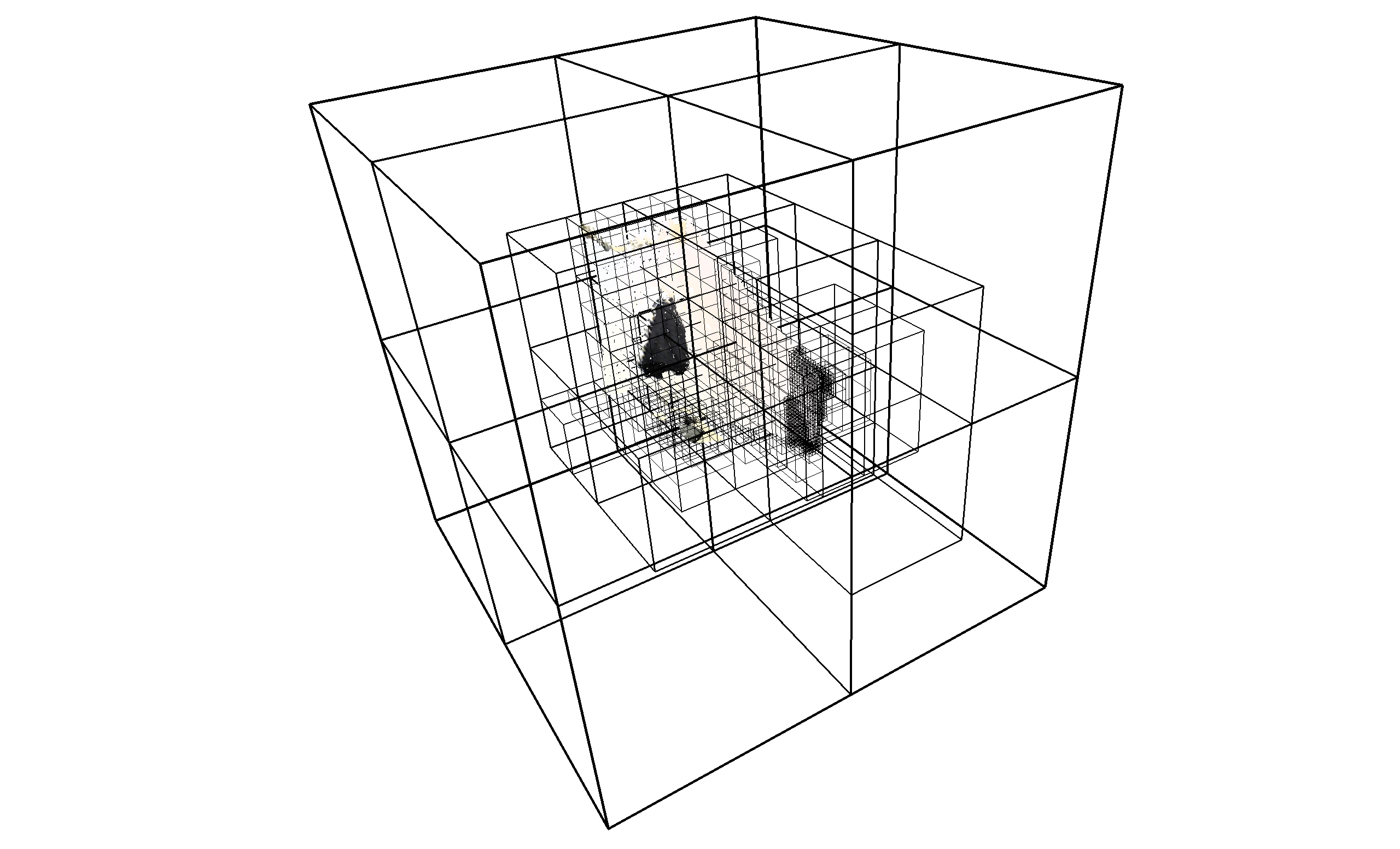

In our publication at ICCV 2013 we describe a method for the volumetric fusion of large RGB-D sequences. The video below shows the mesh visualization of our office floor, a scene computed from more than 24,000 RGB-D images captured with the Asus Xtion sensor. The reconstruction ran at more than 200 Hz on a GTX 680. The finest resolution was 5 mm, and the entire scene fit into approximately 2.5 GB of GPU RAM, including color.
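To give a rough idea of what volumetric fusion means, here is a minimal sketch of the generic per-voxel update that many such systems use: a weighted running average of truncated signed distances. It is not the implementation from the paper. The function name `fuse_tsdf`, the parameters `truncation` and `max_weight`, the unit weight per measurement, and the use of NaN to mark voxels without a measurement are all assumptions made for illustration.

```python
import numpy as np

def fuse_tsdf(tsdf, weight, new_sdf, truncation=0.02, max_weight=64.0):
    """Fuse one frame of signed distances into a voxel volume.

    tsdf, weight: current per-voxel distance and accumulated weight.
    new_sdf: signed distances measured in the new frame; NaN marks
             voxels that received no measurement (an assumed convention).
    """
    # Voxels with a valid measurement in this frame.
    valid = ~np.isnan(new_sdf)
    # Clamp measurements to the truncation band; unmeasured voxels get 0.
    d = np.where(valid, np.clip(new_sdf, -truncation, truncation), 0.0)
    # Every valid measurement contributes with weight 1 (an assumption).
    w_new = valid.astype(tsdf.dtype)
    total = weight + w_new
    # Weighted running average; voxels never observed keep their old value.
    averaged = (tsdf * weight + d * w_new) / np.maximum(total, 1e-12)
    fused = np.where(total > 0, averaged, tsdf)
    # Cap the weight so the volume can still adapt to later measurements.
    return fused, np.minimum(total, max_weight)
```

Calling `fuse_tsdf` once per incoming depth frame, with `new_sdf` computed from the estimated camera pose, would keep a running estimate of the surface as the zero level set of `tsdf`.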

While the method published at ICCV 2013 required a GPU to run in real time, our paper published at ICRA 2014 demonstrated that the mapping part of dense volumetric RGB-D image fusion also works on a single standard CPU core at camera speed. Furthermore, we describe a method for incrementally extracting mesh surfaces from the volumetric data at approximately 1 Hz on a separate CPU core. In comparison to ray-casting visualization methods, surface meshes have the benefit that the visualization is view-independent. This makes the method suitable for transmitting the visualization from an embedded system to a base station. The video below demonstrates our method published at ICRA 2014.

Publications
